AI systems and large language models are more accessible than ever, thanks to open-source projects and API-based solutions. But getting them to deliver accurate, reliable results often requires fine-tuning, prompt engineering, or integrating external data sources. Without customization, their responses can be inconsistent or error-prone.

Frameworks like LangChain help streamline LLM-based application development by connecting models with external data for tasks like chatbots and knowledge retrieval. However, many companies adopt LangChain simply because it’s widely known – not realizing that leaner, more efficient alternatives exist. While LangChain can be useful, its performance overhead and complexity often make it a less-than-ideal choice.

In this article, instead of covering what LangChain is and how it’s used, we compare the leading LangChain alternatives. LangChain isn’t the only option for LLM applications – find out what might work better for your project.

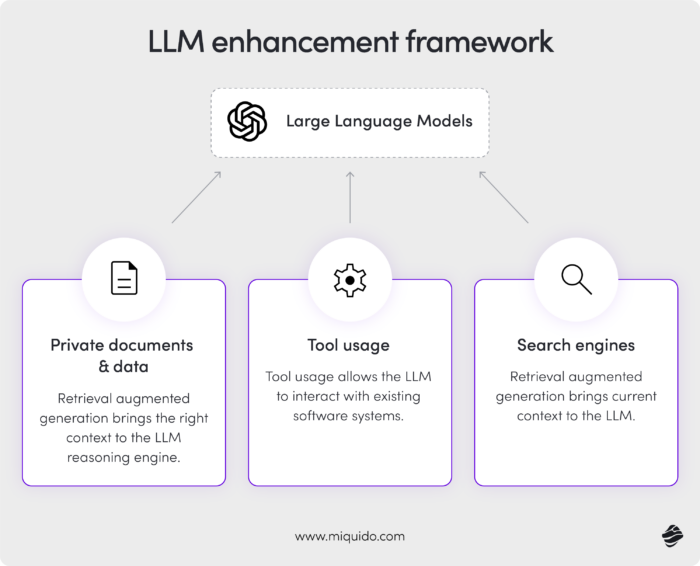

LLM frameworks like LangChain let your language model interact with existing systems and supply it with relevant, up-to-date context.

Why look for LangChain alternatives?

If you’re a developer, you might find LangChain’s complexity and performance overhead slowing down your application – especially if your project doesn’t require extensive chaining mechanisms. Many users struggle with hard-to-navigate documentation, rigid integrations, and debugging challenges, which leads them to explore lighter, more flexible frameworks like LlamaIndex, DrAIve, or Haystack. These LangChain alternatives offer streamlined implementations with greater control over LLM interactions. Some of them also come with low-code tooling that lets users automate complex workflows combining various AI models and tools, improving operational efficiency.

If you’re a CEO, your concerns about hidden costs, vendor lock-in, and scalability are valid. LangChain’s reliance on external APIs can drive up expenses over time, and its all-in-one approach may limit long-term flexibility. Depending on your enterprise needs, building in-house AI solutions on proven frameworks or opting for simple workflow automation tools like Make or n8n could be a smarter investment. In many cases, custom AI development isn’t even necessary – efficient automation can often achieve the same results at a lower cost.

From a product manager’s perspective, LangChain can be slow, clunky, and over-engineered for simple use cases, despite its useful prompt templates. Switching to a faster, more intuitive LangChain alternative could improve both development speed and user experience. Choosing a more flexible framework early can also spare you a costly refactor once your AI agent grows beyond basic chains, reducing overall expenses.

The biggest challenges of using Langchain

LangChain is a popular choice for working with large language models, but it comes with challenges that can slow teams down. Its rigid structure can make integrations more complex than expected, especially in complex workflows; scaling can introduce performance bottlenecks; and inefficient resource handling can quickly increase costs. Many teams start with LangChain hoping for a smooth development process, only to run into unexpected limitations.

Below are the key challenges teams frequently encounter – and why many are actively exploring LangChain alternatives.

1. Rigid and complex integrations

LangChain enforces a structured approach that isn’t always flexible enough for real-world projects. Instead of seamlessly integrating with custom APIs, databases, or proprietary tools, developers often have to work within LangChain’s predefined abstractions.

This adds unnecessary complexity – something as simple as an API call might require fitting into LangChain’s framework, introducing extra dependencies and limiting control.

For teams that prioritize flexibility, this rigidity can slow down development rather than streamline it.

2. Scalability challenges

LangChain works well for small-scale applications, but scaling up can be a struggle. Its reliance on multiple components – such as chains and memory storage – can create bottlenecks as demand increases.

A chatbot or retrieval system might perform well with a handful of users, but as queries grow, response times can slow and infrastructure costs can spike. Many alternatives to LangChain offer leaner, more modular systems that handle scaling more efficiently, reducing both complexity and expense.

3. Hidden costs and high resource consumption

Although LangChain is open-source, running it in production can still lead to unexpected costs. Many implementations rely on external APIs, cloud storage, and databases, which add up quickly. LangChain’s handling of model calls and memory can also lead to excessive token usage – driving up API costs unnecessarily.

For example, a company using LangChain with OpenAI’s GPT-4o might see costs skyrocket due to redundant queries and inefficient memory management. A more direct approach is often far more cost-effective.
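The cost effect of memory handling is easy to see with a little arithmetic. Suppose a chatbot resends the entire conversation history with every request (a common buffer-memory pattern) instead of sending only the last few turns. The minimal sketch below counts the prompt tokens each strategy sends; the 50-turn conversation, roughly 200 tokens per turn, and five-turn window are illustrative assumptions, not measurements of LangChain or GPT-4o.

```python
def tokens_full_history(turns: int, tokens_per_turn: int) -> int:
    """Total prompt tokens when every request resends all previous turns."""
    # Request k carries k turns: the k - 1 earlier turns plus the new message.
    return sum(k * tokens_per_turn for k in range(1, turns + 1))


def tokens_sliding_window(turns: int, tokens_per_turn: int, window: int) -> int:
    """Total prompt tokens when each request carries at most `window` turns."""
    return sum(min(k, window) * tokens_per_turn for k in range(1, turns + 1))


if __name__ == "__main__":
    # Illustrative assumptions: a 50-turn chat with roughly 200 tokens per turn.
    turns, per_turn = 50, 200
    print(f"full history:   {tokens_full_history(turns, per_turn):,} prompt tokens")
    print(f"sliding window: {tokens_sliding_window(turns, per_turn, window=5):,} prompt tokens")
```

Under these assumptions, the full-history strategy sends 255,000 prompt tokens versus 48,000 for the sliding window: full-history cost grows quadratically with conversation length, while windowed cost grows linearly once the window is full. Real savings depend on how much context the task actually needs.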

4. Steep learning curve and developer frustration

Despite its modular design, LangChain can be difficult to learn – especially for teams that just need straightforward LLM functionality. While the documentation is extensive, it’s not always beginner-friendly or well-structured for advanced use cases. For many teams, LangChain adds unnecessary complexity without delivering enough value in return.

5. Overdependence on the LangChain ecosystem

LangChain encourages developers to structure applications around its unique workflows, which can speed up early development but lead to long-term lock-in. If LangChain’s development slows down or shifts direction, businesses may find themselves stuck with an ecosystem that no longer meets their needs.

Migrating away from LangChain often requires major refactoring – something that could have been avoided by choosing a more flexible approach from the start.

That’s why teams increasingly consider LangChain alternatives that offer more flexibility, portability, and control from day one.

Top LangChain alternatives


Concerned that LangChain’s complexity might slow down your project’s development? Or perhaps you want to avoid the performance issues that developers often associate with LangChain?

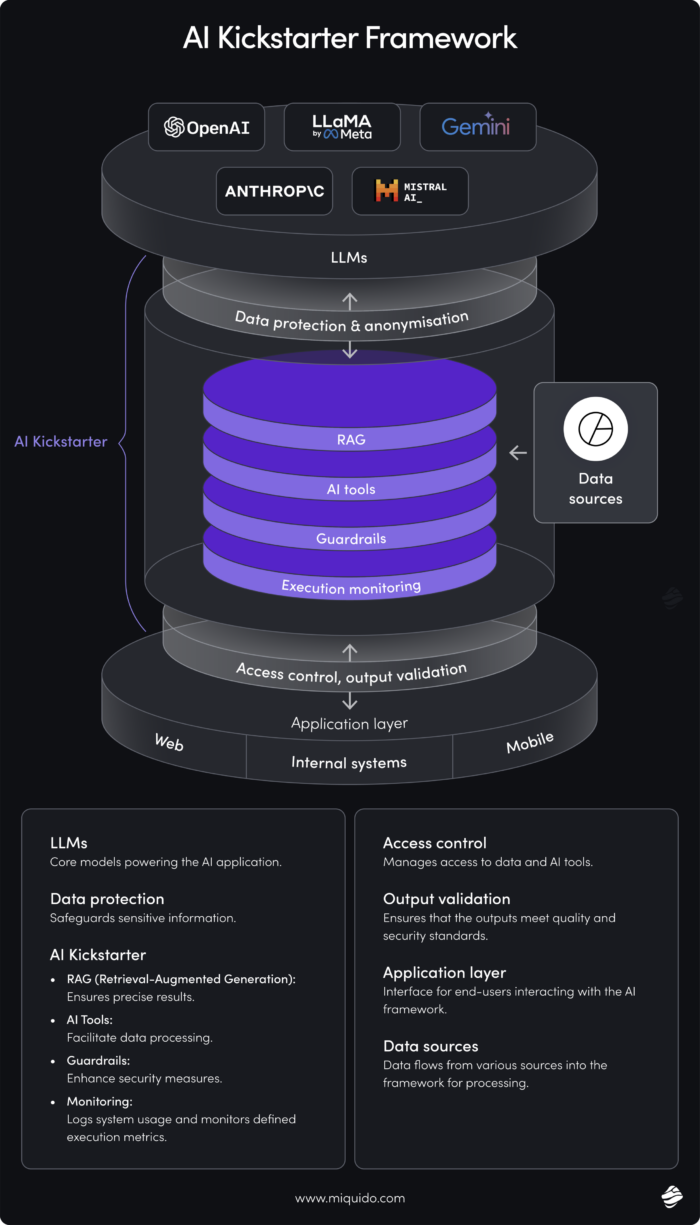

No worries – there are plenty of alternative frameworks that offer greater flexibility, efficiency, and scalability. In this article, we focus on frameworks designed for data integration and knowledge retrieval, such as DrAIve, LlamaIndex, txtai, and Haystack. These tools provide streamlined implementations, better control over LLM interactions, and more efficient resource usage – helping you build AI-powered applications without unnecessary overhead.

Now, let’s explore the best LangChain alternatives and find the right fit for your project.

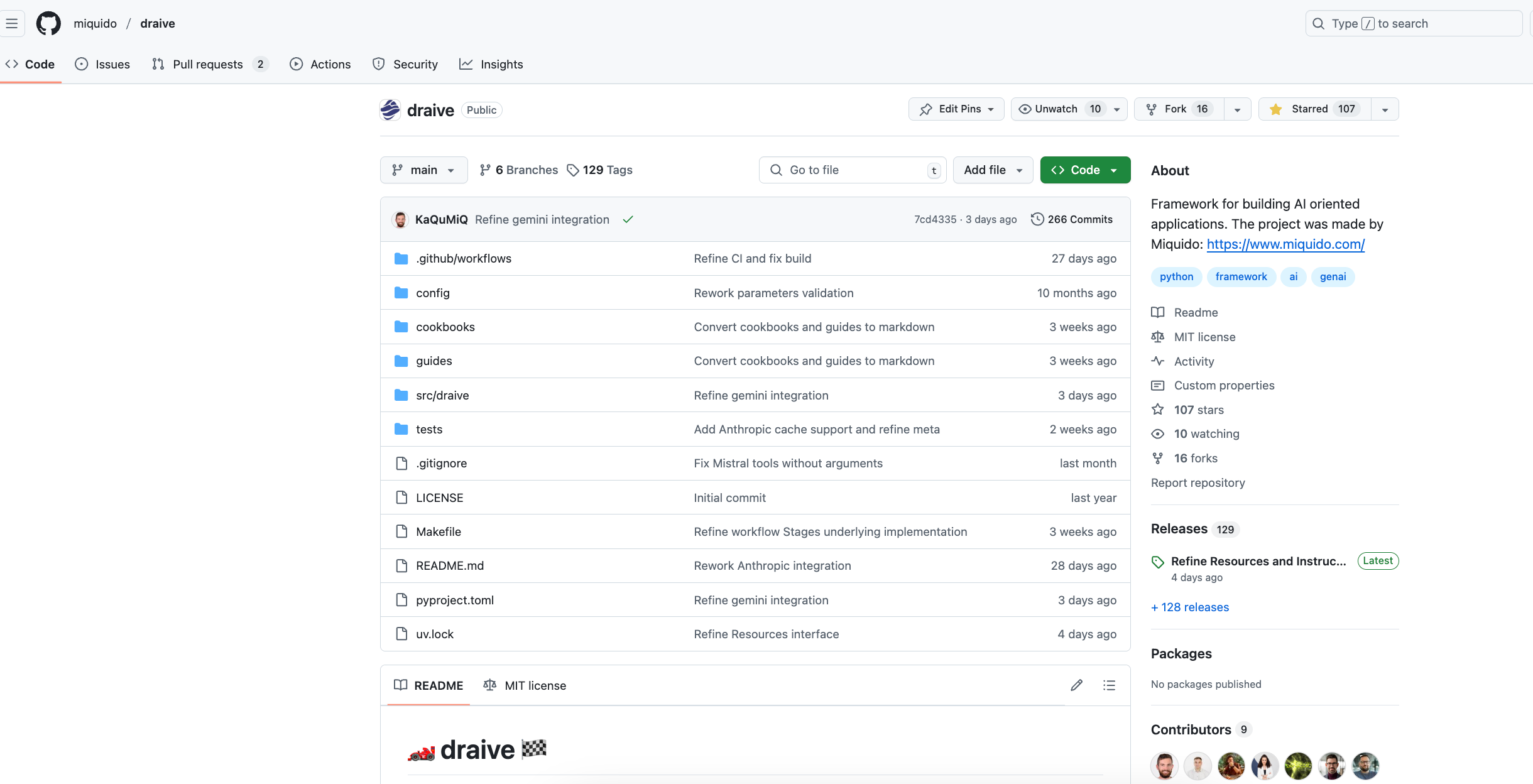

DrAIve (Miquido)

Developed by Miquido, DrAIve is a LangChain alternative designed to simplify LLM integration without the overhead of LangChain’s modular design. It prioritizes flexibility, performance, and ease of scaling, making it easier for developers to:

- Dynamically switch between models without being locked into a specific ecosystem.

- Optimize response times by reducing unnecessary processing layers.

- Scale efficiently across cloud infrastructures without hitting performance bottlenecks.

For teams looking for a leaner, more adaptable solution, DrAIve offers a streamlined LangChain alternative that keeps development agile and efficient.

Key Features of DrAIve

1. Seamless Integration Across Multiple AI Models

One of DrAIve’s key strengths is its ability to integrate with multiple LLMs and switch between them dynamically to balance performance and cost. While LangChain supports different models, it doesn’t offer a smooth way to switch between them mid-task.

DrAIve gives developers full flexibility – they’re not locked into a single provider and can choose the best model (GPT, Mistral, Gemini, Claude, etc.) for each specific use case.
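The idea of switching models mid-task can be illustrated with a small, framework-agnostic router. This is not DrAIve’s API: the model names, prices, complexity ratings, and stubbed clients below are hypothetical placeholders. Each step of a task is routed to the cheapest model rated for that step’s complexity.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelOption:
    name: str                   # hypothetical model identifier
    cost_per_1k_tokens: float   # assumed price, for illustration only
    max_complexity: int         # highest task complexity this model should handle
    call: Callable[[str], str]  # stand-in for a real provider client


def make_stub(name: str) -> Callable[[str], str]:
    # Replace with a real SDK call; the stub just tags the prompt with the model name.
    return lambda prompt: f"[{name}] {prompt}"


MODELS = [
    ModelOption("small-fast-model", 0.1, max_complexity=3, call=make_stub("small-fast-model")),
    ModelOption("large-reasoning-model", 2.0, max_complexity=10, call=make_stub("large-reasoning-model")),
]


def pick_model(complexity: int) -> ModelOption:
    """Return the cheapest model rated for the given complexity."""
    eligible = [m for m in MODELS if m.max_complexity >= complexity]
    if not eligible:
        raise ValueError(f"no model handles complexity {complexity}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


def run_task(steps: list[tuple[str, int]]) -> list[str]:
    """Run a multi-step task, choosing a model per step (mid-task switching)."""
    return [pick_model(complexity).call(prompt) for prompt, complexity in steps]
```

For example, `run_task([("Classify this ticket", 2), ("Draft a detailed reply", 7)])` sends the first step to `small-fast-model` and the second to `large-reasoning-model`. In practice the routing signal could be task type, a latency budget, or a cost ceiling rather than a hand-assigned complexity score.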

2. High Performance Without Overhead

Unlike LangChain, which requires setting up chains, tools, memory, and agents even for simple tasks, DrAIve is designed to be lightweight and performance-focused. Its streamlined architecture removes unnecessary processing steps, reducing API call latency and simplifying infrastructure. As a result, applications built with DrAIve deliver faster response times and lower resource consumption compared to those using LangChain.

3. Robust Security and Compliance Features

Security is critical in AI development, especially in regulated industries. DrAIve offers customizable access control and optional data anonymization, making it a strong choice for businesses that handle sensitive information or face strict compliance requirements.

DrAIve incorporates advanced evaluation methods based on best practices and key metrics like groundedness, truthfulness, and relevance. These techniques help ensure that LLM-generated responses remain accurate, fact-based, and contextually appropriate, reducing the risk of AI hallucinations and misinformation.

DrAIve also features a built-in mechanism for effortless prompt generation and evaluation. Users can create prompts, automatically assess them with predefined evaluators, and refine them iteratively. By using LLMs to optimize prompts, DrAIve helps ensure that final outputs meet all evaluation criteria – resulting in higher-quality AI responses and greater reliability in real-world applications.
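A generate-evaluate-refine loop of the kind described above can be sketched in a few lines. This is a hypothetical illustration, not DrAIve’s implementation: `fake_llm` and `refine` stand in for real model calls, and the groundedness score is a crude lexical proxy (the share of answer words that also appear in the source context), whereas production evaluators typically use an LLM or a trained model as the judge.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased word tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def groundedness(answer: str, context: str) -> float:
    """Crude lexical proxy: share of answer words that also appear in the context."""
    answer_words = words(answer)
    if not answer_words:
        return 0.0
    return len(answer_words & words(context)) / len(answer_words)


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call: it only sticks to the
    # context when the prompt explicitly tells it to.
    if "only the context" in prompt:
        return "Refunds are issued within 14 days."
    return "Refunds usually arrive instantly via crypto."


def refine(prompt: str) -> str:
    # Hypothetical refinement step; a real system might ask an LLM to rewrite the prompt.
    return prompt + " Answer using only the context."


def optimize_prompt(prompt: str, context: str, threshold: float = 0.8,
                    max_rounds: int = 3) -> tuple[str, str, float]:
    """Generate, evaluate, and refine until the answer is grounded enough."""
    answer, score = "", 0.0
    for attempt in range(max_rounds):
        if attempt > 0:
            prompt = refine(prompt)  # the previous answer failed the evaluator
        answer = fake_llm(f"{prompt}\nContext: {context}")
        score = groundedness(answer, context)
        if score >= threshold:
            break
    return prompt, answer, score


if __name__ == "__main__":
    ctx = "Refunds are issued within 14 days of the return being received."
    print(optimize_prompt("How fast are refunds?", ctx))
```

Here the first answer scores well below the 0.8 threshold, so the loop refines the prompt once, and the second answer, which sticks to the context, passes.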

4. Stability and Long-Term Reliability

LangChain’s frequent breaking changes often require developers to refactor their code, leading to increased maintenance costs. DrAIve, on the other hand, maintains a stable and backward-compatible API, reducing the risk of unexpected disruptions when upgrading to newer versions.

DrAIve vs. LangChain: A Comprehensive Comparison

LangChain and DrAIve take different approaches to integrating large language models into applications, each serving distinct needs. LangChain is a well-established framework with modular components for chaining LLM calls, managing memory, and supporting retrieval-augmented generation. While powerful, its complex abstractions can add unnecessary overhead, making even simple tasks more complicated than they need to be.

DrAIve, in contrast, is built for speed, flexibility, and efficiency. It eliminates many of LangChain’s inefficiencies, enabling seamless model switching, reducing latency, and simplifying integrations – all without locking developers into a rigid framework.

| Feature | DrAIve | LangChain |
|---|---|---|
| Ease of Use | Lightweight, minimal setup, optimized for simplicity | Modular but complex, requiring multiple components even for simple tasks |
| Performance | Optimized for speed with minimal processing overhead | Can introduce latency due to multiple layers of abstraction and excessive API calls |
| Model Switching | Dynamically switches between LLMs mid-task for cost and performance optimization | Supports multiple LLMs but lacks real-time switching during a single execution |
| Security & Compliance | Offers built-in access control, data anonymization, and compliance-ready features for regulated industries | No built-in security or compliance tools; relies on external integrations |
| Stability | Maintains a stable and backward-compatible API, reducing maintenance workload | Frequent breaking changes require constant refactoring and updates |
| Prompt Optimization | Includes built-in prompt generation, automatic evaluation, and iterative refinement using LLMs | Provides prompt templates but lacks an automated prompt evaluation or optimization system |
| Evaluation Metrics | Uses groundedness, truthfulness, and relevance to assess LLM responses for higher accuracy | No built-in evaluation framework for assessing response quality or accuracy |

For teams looking for a scalable, high-performance, and secure AI integration framework, DrAIve presents a compelling alternative to LangChain. It simplifies development without sacrificing flexibility or performance, making it an ideal choice for businesses that require stability, cost-efficiency, and compliance-ready AI solutions.

LlamaIndex

LlamaIndex is one of the leading alternatives to LangChain, especially popular among enterprises for its strong data retrieval and indexing capabilities. Designed to connect AI models with private structured and unstructured data, it enables fast, accurate, and scalable search – essential for businesses looking to enhance AI-driven insights.

Unlike LangChain, which focuses on building AI workflows, LlamaIndex is built for retrieval-based AI, making it a better fit for applications that require real-time data access and knowledge management.

Its lightweight, modular design integrates easily into existing systems without the added complexity of predefined AI chains. With comprehensive API documentation and strong support for retrieval-augmented generation, LlamaIndex is a popular choice for developers looking for high-performance, intuitive AI search tools.

How LlamaIndex optimizes AI-powered data retrieval

One of LlamaIndex’s biggest strengths is its ability to connect AI models with business data in real time, allowing AI-driven applications to retrieve relevant information instantly and provide more accurate responses. This makes it especially valuable for enterprises that rely on dynamic, up-to-date knowledge bases rather than only on what a pre-trained LLM already knows.

LlamaIndex supports multiple data sources, seamlessly integrating with databases, APIs, and document repositories. Whether businesses store information in structured SQL databases or unstructured formats like PDFs and internal wikis, LlamaIndex ensures efficient indexing and retrieval to maximize AI-powered insights. Its advanced search and indexing mechanisms further enhance performance, enabling fast, accurate, and context-aware query processing.

What sets LlamaIndex apart is its modular, lightweight design, making integration straightforward and highly adaptable.
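The retrieval step behind this kind of setup (index documents, find the ones most similar to a query, and pass them to the model as context) can be sketched without any framework. This is not LlamaIndex’s API: `TinyIndex` is a hypothetical in-memory stand-in for a vector store, and the bag-of-words "embedding" replaces the learned embedding model a real system would use.

```python
import math
import re
from collections import Counter

# Small stopword list so common words do not dominate the toy similarity scores.
STOPWORDS = {"a", "an", "the", "of", "to", "is", "are", "do", "i", "how", "for", "on", "and"}


def embed(text: str) -> Counter:
    """Toy 'embedding': term counts of non-stopword tokens."""
    return Counter(t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyIndex:
    """Hypothetical in-memory index standing in for a real vector store."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.docs.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(query: str, index: TinyIndex, k: int = 2) -> str:
    """Assemble a RAG-style prompt from the top-k retrieved documents."""
    context = "\n".join(f"- {doc}" for doc in index.retrieve(query, k))
    return f"Answer using this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    index = TinyIndex()
    for doc in [
        "Our VPN requires two-factor authentication for all staff.",
        "Expense reports are due on the 5th of each month.",
        "The VPN client can be downloaded from the IT portal.",
    ]:
        index.add(doc)
    print(build_prompt("How do I set up the VPN?", index))
```

With these three sample documents, a question about VPN setup retrieves the two VPN documents and leaves the expense-report policy out of the prompt.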

Key use cases for LlamaIndex

Enterprise Knowledge Management – Companies with large knowledge bases use LlamaIndex to make internal documents easily searchable. This helps employees quickly find relevant information, boosting productivity and reducing time spent searching for data.

AI-Powered Customer Support – Businesses frequently integrate LlamaIndex into chatbots and virtual assistants to provide real-time, company-specific answers instead of generic or outdated responses. This enhances customer satisfaction and improves support efficiency.

Secure AI-Assisted Research in Regulated Industries – Healthcare, legal, and other regulated industries use LlamaIndex to retrieve critical case studies, compliance documents, and reports while ensuring data privacy and security.

LlamaIndex vs. LangChain: Feature Comparison

| Feature | LlamaIndex | LangChain |
|---|---|---|
| Specialization | Retrieval-based AI, optimized for search and knowledge management | AI workflow orchestration: chains, memory, and tool integrations |
| Ease of Use | Lightweight, modular, and easy to integrate | More complex; requires multiple components even for simple use cases |
| Performance | Fast, accurate data retrieval with minimal overhead | Can introduce latency due to additional processing layers |
| Integration | Direct, real-time connections to databases, APIs, and documents | Broader integration capabilities, but less optimized for data retrieval |
| RAG Support | Built for RAG; supports vector DBs like Pinecone, Weaviate, ChromaDB, FAISS | Supports RAG, but setup is more involved with added dependencies |
| Scalability | Scales easily for knowledge management and document retrieval | Scales for complex AI workflows, but infrastructure can become challenging |
| Documentation & Community | Clear, well-structured API docs; popular for search-focused use cases | Active community across AI domains; steeper learning curve |
| Security & Compliance | Secure by design for retrieval and privacy-compliant outputs | No native compliance; relies on third-party or custom implementations |

txtai

txtai is a powerful, all-in-one embedding database that streamlines semantic search, LLM orchestration, and complex AI workflows. Unlike LangChain, which relies on multiple components and integrations to achieve similar functionality, txtai consolidates vector indexing, graph networks, and relational databases into a single, lightweight solution.

Developers and enterprises looking for a flexible, self-contained LangChain alternative can leverage txtai’s efficient architecture to:

- Perform advanced semantic search with SQL-powered vector queries.

- Integrate seamlessly with multiple LLMs without requiring complex middleware.

- Handle multimodal data including text, audio, images, and video.

- Build and manage AI workflows using its built-in orchestration tools.

With support for Python and YAML-based configurations, txtai ensures ease of use while also offering API bindings for JavaScript, Java, Rust, and Go, making it a cross-platform solution adaptable to diverse tech stacks.

Key Features of txtai

1. Vector Search with SQL Integration

One of txtai’s standout features is its ability to combine traditional relational database queries with vector search, enabling structured and unstructured data retrieval in a single query. This is particularly useful for applications that require semantic search on large datasets while maintaining SQL-style filtering and ranking.
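Combining a structured SQL filter with semantic ranking in a single query can be illustrated with Python’s built-in `sqlite3` module. This sketch does not use txtai, which has its own SQL support; it registers a hypothetical `cosine_sim` function with SQLite and uses hand-made 3-dimensional vectors in place of real model embeddings.

```python
import json
import math
import sqlite3


def cosine_sim(a_json: str, b_json: str) -> float:
    """Cosine similarity between two vectors stored as JSON arrays."""
    a, b = json.loads(a_json), json.loads(b_json)
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # Expose the Python function to SQL so queries can rank rows by similarity.
    conn.create_function("cosine_sim", 2, cosine_sim)
    conn.execute("CREATE TABLE docs (id INTEGER PRIMARY KEY, category TEXT, text TEXT, embedding TEXT)")
    # Hand-made 3-d vectors stand in for real model embeddings (assumption).
    rows = [
        ("support", "Reset your password from the login page", [0.9, 0.1, 0.0]),
        ("support", "Contact billing about invoices", [0.1, 0.9, 0.0]),
        ("marketing", "Password managers are trending", [0.8, 0.2, 0.1]),
    ]
    conn.executemany(
        "INSERT INTO docs (category, text, embedding) VALUES (?, ?, ?)",
        [(cat, text, json.dumps(vec)) for cat, text, vec in rows],
    )
    return conn


def search(conn: sqlite3.Connection, query_vec: list[float], category: str, limit: int = 2):
    """Structured filter (WHERE) plus semantic ranking (ORDER BY similarity) in one query."""
    return conn.execute(
        """
        SELECT text, cosine_sim(embedding, ?) AS score
        FROM docs
        WHERE category = ?
        ORDER BY score DESC
        LIMIT ?
        """,
        (json.dumps(query_vec), category, limit),
    ).fetchall()


if __name__ == "__main__":
    conn = build_db()
    for text, score in search(conn, [1.0, 0.0, 0.0], "support"):
        print(f"{score:.3f}  {text}")
```

Searching the `support` category with the query vector `[1.0, 0.0, 0.0]` ranks the password-reset document first, while the similar-looking marketing document is excluded by the `WHERE` clause. A production system would use an approximate nearest-neighbor index rather than scoring every row.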

2. Multimodal Indexing for Text, Audio, Images, and Video

txtai extends beyond text-based retrieval by supporting multimodal indexing, allowing developers to embed and search across various data types, including audio, images, and video. This flexibility makes it an excellent choice for applications in fields like media analysis, voice search, and image-based AI workflows.

3. Language Model Pipelines for Various Natural Language Processing Tasks

txtai offers pre-configured NLP pipelines for tasks such as text classification, summarization, named entity recognition, and document similarity analysis. These pipelines simplify the process of integrating AI-powered features into applications, reducing the need for manual prompt engineering or chaining multiple API calls.

4. Workflow Orchestration for Complex AI Processes

txtai includes a built-in workflow orchestration engine that lets developers automate AI processes and combine multiple models and NLP pipelines efficiently. Whether it’s retrieval-augmented generation, document processing, or multi-step agentic workflows, txtai provides a declarative way to define and execute complex pipelines.
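At its core, a declarative workflow is a list of named steps that a runner applies in order. The sketch below is a hypothetical, framework-agnostic illustration rather than txtai’s workflow API: the three text-cleaning tasks are placeholders for steps such as embedding, indexing, or LLM calls.

```python
import re
from typing import Callable

Batch = list[str]


def normalize(batch: Batch) -> Batch:
    """Collapse runs of whitespace and trim each document."""
    return [" ".join(doc.split()) for doc in batch]


def split_sentences(batch: Batch) -> Batch:
    """Naive splitter (assumption: '.', '!' or '?' followed by whitespace ends a sentence)."""
    return [s for doc in batch for s in re.split(r"(?<=[.!?])\s+", doc) if s]


def drop_short(batch: Batch, min_words: int = 3) -> Batch:
    """Discard fragments too short to be useful downstream."""
    return [s for s in batch if len(s.split()) >= min_words]


# Registry of available tasks, looked up by name.
REGISTRY: dict[str, Callable[[Batch], Batch]] = {
    "normalize": normalize,
    "split_sentences": split_sentences,
    "drop_short": drop_short,
}

# Declarative workflow definition: plain data, so it could come from a config file.
WORKFLOW = ["normalize", "split_sentences", "drop_short"]


def run_workflow(steps: list[str], batch: Batch) -> Batch:
    """Apply each named task in order, feeding one task's output into the next."""
    for name in steps:
        batch = REGISTRY[name](batch)
    return batch


if __name__ == "__main__":
    raw = ["  Invoices are due monthly.   Thanks!  Contact   billing for help. "]
    print(run_workflow(WORKFLOW, raw))
```

Running the workflow on the sample document normalizes its whitespace, splits it into three sentences, and drops "Thanks!" as too short, leaving two sentences. Because the workflow itself is just a list of names, steps can be reordered or swapped without touching the runner.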

txtai vs. LangChain: A Comprehensive Comparison

| Feature | txtai | LangChain |
|---|---|---|
| Ease of Use | Simple, self-contained, minimal setup | Modular but complex, requiring multiple components |
| Vector Search | Built-in vector search with SQL filtering | Requires external vector database integrations |
| Multimodal Support | Supports text, audio, image, and video indexing | Primarily text-based, multimodal support requires extra dependencies |
| LLM Orchestration | Direct LLM integration with workflow automation | Chaining-based approach, requiring extra components for workflow execution |
| NLP Pipelines | Built-in pipelines for classification, summarization, NER, etc. | Requires separate setup for NLP tasks |
| Scalability | Lightweight and efficient, runs locally or in cloud environments | Scalable but dependent on external infrastructure |
| Security & Compliance | Can be deployed on-premise for full data control | Mostly relies on cloud-based APIs |
| Cross-Language Support | API bindings for JavaScript, Java, Rust, and Go | Primarily Python-based, requiring custom implementations for other languages |
| Stability | Stable, backward-compatible API with minimal breaking changes | Frequent updates and breaking changes requiring maintenance |
| Pricing | Free and open-source | Open-source, but can lead to high API costs due to inefficiencies |

Haystack

Haystack is a powerful, open-source framework designed to help developers build scalable and production-ready AI applications with ease. Whether you’re working on chatbots, intelligent search solutions, or retrieval-augmented generation applications, Haystack provides the necessary tools to streamline development.

Unlike LangChain, which often requires piecing together multiple components to achieve full functionality, Haystack offers a well-structured, modular approach that includes pre-built pipelines, retrieval mechanisms, and integrations with various LLM providers.

With extensive documentation, tutorials, and an active developer community, Haystack is an excellent choice for both junior and experienced engineers looking to build robust LLM-based applications without unnecessary complexity.

Key Features of Haystack

1. Modular Architecture with Customizable Components

Haystack follows a modular design, allowing developers to customize their AI pipelines to efficiently retrieve relevant data according to their needs. Its plug-and-play approach lets you combine various retrieval, generation, and indexing techniques without being locked into a rigid framework.

This is particularly beneficial for teams looking to experiment with different retrieval strategies, model providers, and storage solutions.

2. Support for Multiple Model Providers

Haystack seamlessly integrates with multiple model providers, including:

- Hugging Face Transformers (for open-source LLMs),

- OpenAI’s GPT models,

- Cohere,

- And other third-party AI services.

Unlike LangChain, which sometimes requires additional setup and middleware to switch between models, Haystack natively supports multiple providers, making it easy to adapt to changing requirements or optimize for cost and performance.

3. Integration with Document Stores and Vector Databases

Haystack allows for flexible data storage by integrating with various:

- Document stores (e.g., Elasticsearch, Weaviate, FAISS, Milvus),

- Vector databases for efficient embedding-based retrieval,

- Relational databases for structured queries.

In contrast, LangChain often relies on third-party integrations, which can introduce complexity and require additional configuration. Haystack simplifies the process by offering native support for diverse storage solutions out of the box.

4. Advanced Retrieval Techniques for High-Quality Context

One of Haystack’s standout features is its support for Hypothetical Document Embeddings (HyDE) – an advanced retrieval technique that improves the contextual accuracy of LLM prompts.

HyDE has an LLM generate a hypothetical document that answers the user’s query, then searches with that document’s embedding instead of the raw query’s. Because the hypothetical answer tends to resemble the relevant documents more closely than a short query does, HyDE helps improve search relevance and reduce the risk of hallucinated responses from LLMs.

LangChain provides retrieval mechanisms, but they lack the built-in sophistication of Haystack’s HyDE retrieval, which can be a game-changer for applications requiring precise and context-rich AI-generated responses.
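HyDE’s core idea fits in a short sketch: instead of embedding the raw query, ask an LLM to write a hypothetical answer, embed that, and retrieve the real documents closest to it. This is not Haystack’s API: `fake_llm_hypothetical_answer` is a hypothetical stand-in with a canned response, and bag-of-words vectors replace the dense embedding model a real HyDE setup would use.

```python
import math
import re
from collections import Counter

STOPWORDS = {"a", "an", "the", "of", "to", "is", "are", "was", "my", "why", "or", "if",
             "as", "on", "in", "your", "our", "has", "may", "be", "when", "and", "for"}


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real HyDE setup would use a dense embedding model."""
    return Counter(t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fake_llm_hypothetical_answer(query: str) -> str:
    # Hypothetical stand-in for an LLM prompted with something like
    # "Write a short passage that answers this question".
    canned = {
        "why was my card declined?": (
            "A card payment may be declined when the account has insufficient funds, "
            "the card has expired, or the bank flags the transaction as suspected fraud."
        ),
    }
    return canned.get(query.lower(), query)


DOCS = [
    "Payments fail if the card has expired or funds are insufficient.",
    "Our office is closed on public holidays.",
    "Update your card details in the billing settings.",
]


def retrieve(query_vec: Counter, docs: list[str], k: int = 1) -> list[str]:
    return sorted(docs, key=lambda d: cosine(query_vec, embed(d)), reverse=True)[:k]


def hyde_search(query: str, docs: list[str], k: int = 1) -> list[str]:
    """HyDE: search with the embedding of a generated answer, not of the query itself."""
    hypothetical_doc = fake_llm_hypothetical_answer(query)
    return retrieve(embed(hypothetical_doc), docs, k)


if __name__ == "__main__":
    q = "Why was my card declined?"
    print("plain query:", retrieve(embed(q), DOCS))
    print("HyDE:       ", hyde_search(q, DOCS))
```

Searching with the raw query, which overlaps with the documents only on "card", ranks the billing-settings document first. The hypothetical answer shares vocabulary such as "expired", "funds", and "insufficient" with the payment-failure document, so the HyDE search retrieves the more relevant one.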

Haystack vs. LangChain: A Comprehensive Comparison

| Feature | Haystack | LangChain |
|---|---|---|
| Ease of Use | Modular, with built-in components and flexible pipelines | Requires assembling multiple components manually |
| Model Support | Hugging Face, OpenAI, Cohere, and other providers | Supports multiple models but requires additional integration for switching |
| Retrieval Techniques | Advanced retrieval (e.g., HyDE) for better context | Standard retrieval methods, requiring custom implementation for advanced techniques |
| Vector & Document Storage | Built-in support for Elasticsearch, FAISS, Weaviate, Milvus, etc. | Requires additional integrations for document and vector storage |
| Scalability | Designed for production workloads with efficient indexing and retrieval | Can introduce performance overhead with complex chaining mechanisms |
| Security & Compliance | On-premise deployment options for full data control | Often reliant on cloud-based APIs, leading to potential compliance challenges |
| Pipeline Flexibility | Fully modular, with easy-to-configure NLP pipelines | Requires chaining multiple tools together, leading to added complexity |
| Community & Documentation | Strong open-source community with detailed tutorials | Large community but documentation can be fragmented |
| Pricing | Free and open-source | Open-source but may lead to high API costs due to inefficiencies |

Comparing LangChain Alternatives

LangChain is a popular framework for integrating large language models into applications, but its complexity, scalability issues, and high resource usage often push developers to look for better alternatives. Frameworks like DrAIve, LlamaIndex, txtai, and Haystack offer more efficient solutions, each designed for specific AI use cases.

Whether you need lightweight model management, real-time data retrieval, or optimized retrieval-augmented generation, these alternatives provide better performance, flexibility, and cost savings compared to LangChain’s rigid structure.

For AI-powered search and knowledge retrieval, LlamaIndex and Haystack stand out by connecting directly with databases, APIs, and vector stores – delivering faster, more accurate search results. txtai, on the other hand, is a compact embedding database with multimodal support, making it ideal for applications involving text, audio, images, and video.

If you’re looking for easy model switching, strong security, and minimal infrastructure setup, DrAIve is a great option, offering high performance and stability without the complexity of LangChain’s chained workflows.

Below is a comparison of key features and use cases to help you decide.

Comparison of Features & Use Cases

| Feature | DrAIve | LlamaIndex | txtai | Haystack | LangChain |
|---|---|---|---|---|---|
| Best For | LLM integration & orchestration, RAG | AI-powered search & knowledge retrieval | Embedding database & multimodal AI | RAG-based search & chatbots | General-purpose AI chaining |
| Ease of Use | Lightweight, minimal setup | Modular, works with structured & unstructured data | Self-contained, easy to configure | Pre-built pipelines for search applications | Requires chaining multiple components |
| Performance | Optimized for speed, minimal overhead | Fast indexing & retrieval | Low-latency vector search | Advanced retrieval with high accuracy | Can slow down due to excessive chaining |
| Scalability | Automated scaling (IaC) | Scales well for search-heavy apps | Scales efficiently across data types | Designed for large-scale search & chatbots | Needs manual tuning |
| Security & Compliance | Built-in access control, evaluators & data anonymization | Secure retrieval for enterprises | Can run on-premise for data control | Supports both on-premise & cloud | No built-in security, relies on third-party tools |
| Stability | Backward-compatible, minimal breaking changes | Stable API, widely used in enterprises | Lightweight, well-maintained | Actively maintained, production-ready | Frequent updates requiring refactoring |
| Pricing | Free & open-source | Free & open-source | Free & open-source | Free & open-source | Free & open-source |

Why DrAIve Is a Strong LangChain Alternative

If you need a framework that is fast, reliable, and easy to work with, DrAIve is one of the best alternatives to LangChain. Unlike LangChain, which forces developers into a complex workflow, DrAIve keeps things simple by removing unnecessary processing steps and allowing dynamic model switching to control performance and costs.

For retrieval-based AI applications, LlamaIndex and Haystack are great choices, but DrAIve offers more flexibility, making it ideal for businesses needing both RAG and smooth LLM orchestration. It also provides better security and compliance, with built-in access control and data anonymization, making it a strong option for regulated industries and enterprise AI projects.

If you want AI-powered applications that are scalable, cost-effective, and easy to maintain, DrAIve is a top choice – offering a better balance of performance, efficiency, and simplicity than LangChain.

![[header] best 5 langchain alternatives for your ai projects](https://www.miquido.com/wp-content/uploads/2025/02/header-best-5-langchain-alternatives-for-your-ai-projects.jpg)