Security breaches are some of the worst consequences of neglecting legacy code. Take the WordPress malware attack between 2022 and 2023, where hackers exploited poorly structured advertising code on 14,000 sites and infected users worldwide.

This shows that neglecting legacy code is a risk no business can afford. But security isn’t the only concern.

Legacy code also leads to compatibility issues with modern operating systems and browsers, performance bottlenecks due to inefficient computations, and non-compliance with regulatory standards in sectors like finance, healthcare, and government.

This means refactoring legacy code is necessary if companies want their software to stay secure, work as intended, and keep functioning in the future. Let’s learn how you can do that. One crucial step is to extract the core business logic so that existing functionality is preserved while the application is updated with modern frameworks and simpler methodologies.

Understanding legacy code challenges

While all code eventually becomes legacy, some of it stays easy to maintain through consistent testing and framework or language updates. That’s not the case with code that hasn’t been maintained: with each version change, it becomes even more difficult to manage.

Maintaining code quality through good documentation, CI/CD pipelines, and regular code reviews is essential for providing feedback, ensuring adherence to best practices, and contributing to overall code improvement. Below, we will go through three of the most common challenges of legacy systems and code.

1. Lack of documentation

Legacy code often doesn’t have any detailed documentation, which makes understanding the code’s logic, data flow, and dependencies difficult. This may be because of a lack of access to the original developers, outdated documentation, misinformation, or duplicate code.

As a result, developers need to perform extensive code analysis or reverse engineering to figure out how the system works. In fact, companies doing legacy code refactoring spend 50% of their total time trying to understand the code first.

2. Technical debt

Technical debt is the implied cost of additional rework caused by choosing an easy solution now instead of a better approach that would take longer. This can cause your code to have code smells like duplicated code, large classes, or long methods.

Over time, patches and hotfixes also get stacked on top of each other—as everything that breaks gets fixed quickly. This misses the underlying architectural issues, which makes it harder to modify the system.

Regardless of the cause, too much technical debt increases the complexity of your codebase, makes it more prone to error, and is very expensive to refactor or rewrite.

3. Outdated practices

Legacy systems usually rely on technologies, frameworks, or programming languages that have become obsolete or have fallen out of fashion. For instance, they may use unsupported libraries or frameworks that now create security risks and compatibility issues with newer systems.

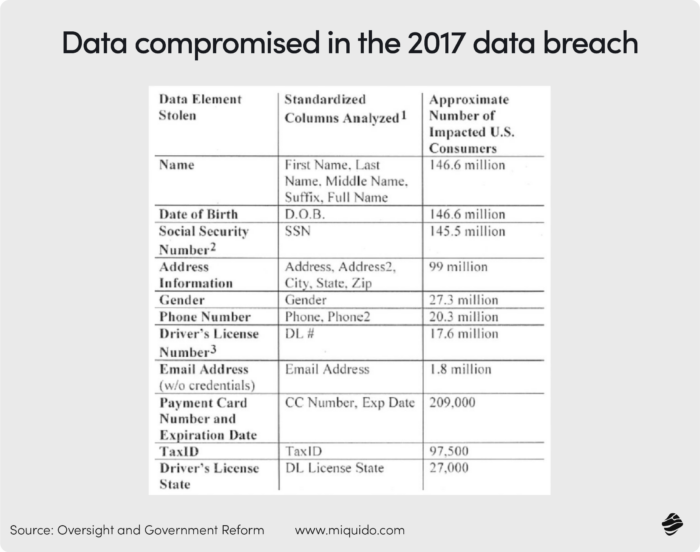

This actually happened to Equifax in 2017, where hackers used a security hole in the company’s computer system to compromise the data of 148 million people. Here’s some of the data that was leaked:

The Committee on Oversight and Government Reform ruled that this was partly due to the legacy code on the company’s website. While the company was actively making reforms to its legacy code, it wasn’t fast enough to detect the breach because it didn’t have the right systems in place.

Such delays often come from difficulty finding talent to maintain or upgrade the system, lack of compatibility with other systems, or incompatibility with IDEs, version control systems, and CI/CD pipelines.

Importance of refactoring legacy code

Security breaches are one of the most dangerous consequences of not updating legacy code, which is why legacy code refactoring should be at the top of your priority list.

Refactoring code involves restructuring existing code without changing its external behavior. Let’s break down why it’s so important:

1. Improved performance

Outdated software often has vulnerabilities that may or may not be discovered and could be exploited over time. For instance, it could have unpatched security flaws and may not be compatible with modern encryption standards.

Refactoring this code will make it cleaner, more organized, and easier to understand. This, in turn, makes it easier for developers to read, modify, and extend the codebase, which reduces the time and effort required for future maintenance.

2. Easier maintenance

Legacy code is complicated, difficult to understand, and sometimes fragile because it may have too many layers. Refactoring code breaks this complex, monolithic code down into smaller, manageable modules or functions.

This makes it easier for developers to adopt modern coding conventions, debug the code, and make changes to the codebase. Maintenance and troubleshooting also become less expensive.

3. Reduced technical debt

Accumulated technical debt like a monolithic codebase, outdated dependencies, and anti-patterns from quick fixes and outdated practices cripple development efforts. This makes the codebase increasingly complex, error-prone, and difficult to maintain or extend.

Refactoring legacy code helps companies overcome this technical debt in several ways:

- It breaks down large methods and classes into smaller components to reduce complexity

- It removes duplicated and unused code segments that create confusion

- It organizes code in layers to ensure maintainability

- It moves to supported versions of frameworks and libraries to improve security

This means that developers spend less time deciphering convoluted code and more time adding value through new features. This frees up resources and positions the organization for sustainable growth.
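As a minimal sketch of the first two bullets above, the hypothetical `invoice_total_legacy` function mixes validation, pricing, discounting, and tax in one block, while the refactored `invoice_total` splits the same logic into small helpers. Every name here, along with the 20% tax rate and the 5% bulk discount, is an illustrative assumption rather than a rule from any real system.

```python
TAX_RATE = 0.20  # assumed rate, for illustration only


def invoice_total_legacy(items):
    # Legacy style: validation, subtotal, discount, and tax in one block.
    total = 0
    for item in items:
        if item["qty"] <= 0 or item["price"] < 0:
            raise ValueError("invalid line item")
        total += item["qty"] * item["price"]
    if total > 1000:
        total = total - total * 0.05
    total = total + total * 0.20
    return round(total, 2)


def validate(item):
    # Reject line items with a non-positive quantity or negative price.
    if item["qty"] <= 0 or item["price"] < 0:
        raise ValueError("invalid line item")


def subtotal(items):
    # Sum of quantity times price across all line items.
    return sum(item["qty"] * item["price"] for item in items)


def apply_bulk_discount(amount, threshold=1000, rate=0.05):
    # Discount applies only above the threshold, as in the legacy code.
    return amount - amount * rate if amount > threshold else amount


def add_tax(amount, rate=TAX_RATE):
    return amount + amount * rate


def invoice_total(items):
    # Refactored entry point: same steps, each one named and testable.
    for item in items:
        validate(item)
    return round(add_tax(apply_bulk_discount(subtotal(items))), 2)
```

Keeping the legacy function around while the new one is introduced makes it possible to compare both on the same inputs before switching callers over.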

4. Better scalability

Scalability is the capacity of a system to handle an increasing amount of work or its potential to accommodate growth without compromising performance.

Since legacy systems often have monolithic architectures where components are tightly coupled, it becomes difficult to scale parts of the system. This causes performance bottlenecks, system instability, and an inability to meet growing user demands. Refactoring loosens this coupling so that individual components can be scaled independently.

5. Increased security

Security is one of the main reasons for legacy app modernization. When code isn’t regularly maintained and refactored, it becomes a fertile ground for security breaches that can compromise sensitive data and leak user information.

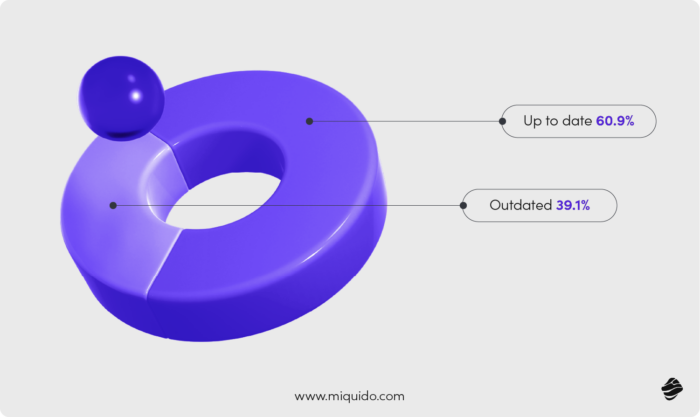

For instance, in 2023, 39.1% of content management system (CMS) applications were outdated at the point of infection.

Legacy code usually contains vulnerabilities that have been publicly disclosed and are well-known to attackers. Refactoring updates or replaces these insecure components, patches security holes, and ensures that all dependencies are up-to-date.

It also reduces the attack surface through code modularization, which makes it more difficult for attackers to find and exploit vulnerabilities within the system.

5 Key steps in the legacy code refactoring process

Here’s the process for refactoring legacy code:

1. Understand your codebase

You first need to understand the architecture, dependencies, and existing issues to decide whether refactoring or rewriting is the right choice for your legacy system.

If you choose refactoring, here’s what to do:

- Visualize the system’s structure, data flow, and dependencies to see how different components interact. You can do this through diagrams and models

- Figure out which parts of the code are necessary to business operations or are frequently modified

- Evaluate the code for complexity, redundancy, and potential issues

You also need to document all external libraries, frameworks, and services your code relies on. This will help you plan updates in the next step.
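For a Python codebase, one possible starting point for this inventory is the standard library’s `importlib.metadata`, which lists the distributions installed in the current environment. The helper names below are hypothetical, and the sketch covers only Python packages, not external services or system libraries.

```python
from importlib.metadata import distributions


def installed_packages():
    """Map each installed distribution name to its version string."""
    inventory = {}
    for dist in distributions():
        meta = dist.metadata
        name = meta.get("Name")
        if name:  # skip entries with broken or missing metadata
            inventory[name] = meta.get("Version", "unknown")
    # Sort case-insensitively so the output is stable and easy to diff.
    return dict(sorted(inventory.items(), key=lambda item: item[0].lower()))


def format_inventory(inventory):
    """Render the inventory as 'name==version' lines, one per package."""
    return "\n".join(f"{name}=={version}" for name, version in inventory.items())
```

Calling `print(format_inventory(installed_packages()))` produces a list that can be checked into the repository and compared over time.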

2. Plan how you’ll refactor legacy code

Next, create a clear roadmap for the refactoring process. You can start by setting a clear and measurable goal for what you’re looking to achieve, such as reducing codebase size, improving security, or increasing performance.

After you’ve established an overarching goal, rank modules or features based on their criticality, complexity, and impact on other systems. Also, you need to decide whether you want to perform a complete overhaul or implement incremental improvements at this point.

This will help you understand which tasks to focus on first. Once that’s done, create a roadmap outlining phases, timelines, milestones, and deliverables, then get it approved.

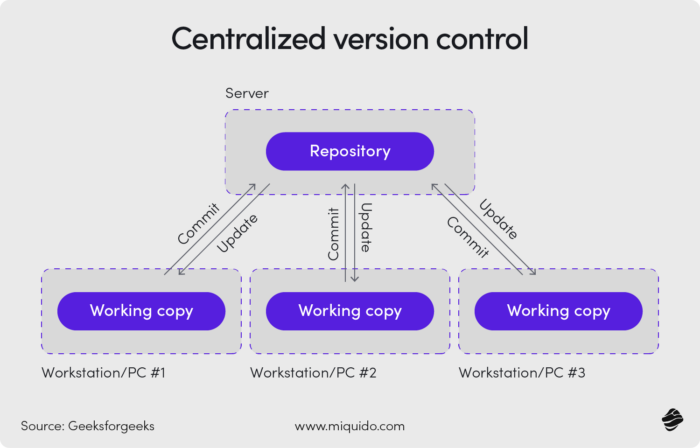

3. Set up a version control system

A version control system ensures that every change is documented and reversible. It maintains a history of changes, so a faulty change can be rolled back before it disrupts the live system.

Just make sure to create a branching strategy that defines exactly how to manage each stream of work, such as bug fixes and refactoring efforts, to prevent people from duplicating or overwriting each other’s work.

4. Set up a testing baseline

Once a version control system is set up, you need to make sure you have a testing baseline that ensures that the existing functionality remains intact and that the refactoring process doesn’t introduce new issues. Here’s what you can do:

- Create unit tests for individual functions, integration tests for modules working together, functional tests against requirements, and regression tests to catch unintended changes

- Integrate automated tests into your development process so they catch issues you didn’t spot yourself

You should also plan for load testing to figure out how the system performs under stress and security testing to find vulnerabilities and deal with them before they become doors to threats.
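One common way to build such a baseline is a characterization test: record what the legacy code returns today and assert that it keeps returning the same values. The sketch below assumes a hypothetical `legacy_shipping_cost` function and a handful of recorded outputs; it is written in the plain-function style that pytest collects.

```python
def legacy_shipping_cost(weight_kg, express):
    # Hypothetical legacy function whose current behavior we want to pin down.
    cost = 5.0
    if weight_kg > 10:
        cost += (weight_kg - 10) * 0.5
    if express:
        cost *= 2
    return cost


# Outputs recorded from the current system before any refactoring.
BASELINE = {
    (1, False): 5.0,
    (10, False): 5.0,
    (12, False): 6.0,
    (12, True): 12.0,
}


def test_legacy_behavior_is_unchanged():
    # Any refactoring that alters a recorded output fails this test.
    for (weight_kg, express), expected in BASELINE.items():
        assert legacy_shipping_cost(weight_kg, express) == expected
```

The recorded values describe what the code does, not what it should do, so a surprising entry is worth investigating separately rather than "fixing" during the refactor.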

5. Implement refactoring

At this point, you can start making changes to the codebase. Incremental changes—where you tackle the code in small, manageable sections—are best at first because they have lower risk, are easier to track, and help you identify issues quickly.

While you’re doing that, make sure that each change you make doesn’t disrupt the current function of the system. You want to avoid introducing new bugs or affecting your system’s functionality.

Once that’s done, you can update the system, replace outdated code, and lay the groundwork for future maintenance.

7 Code assessment and analysis techniques for legacy code refactoring

Here are seven methods to help you assess code quality, identify bottlenecks, and figure out where legacy code refactoring will be most impactful:

1. Syntax analysis

Syntax analysis focuses on identifying syntax errors and ensuring compliance with coding standards. Some common issues include missing brackets, invalid variable names, and poor indentation.

Modern integrated development environments (IDEs) feature built-in syntax analysis tools. For instance, Visual Studio Code uses IntelliSense to flag issues. This helps developers spot errors early during the refactoring process—often before running their code.

2. Data and control flow analysis

Data flow analysis tracks the movement of data throughout a program and identifies problems like uninitialized variables, null pointers, or data race conditions. Control flow analysis checks for bugs like infinite loops and unreachable code.

Modern compilers often build in these analyses and flag issues as warnings or errors during compilation. For example, the Clang compiler for C-family languages performs flow analysis automatically.

For other languages like Python, tools like CodeQL help with manual data and control flow analysis.
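To make the idea of control flow analysis concrete, here is a deliberately simplified sketch that uses Python’s standard `ast` module to find statements placed directly after a `return`, `raise`, `break`, or `continue` in the same block. The function name `find_unreachable` is hypothetical, and real analyzers cover far more cases than this one check.

```python
import ast

# Statements after which the rest of the block cannot run.
TERMINATORS = (ast.Return, ast.Raise, ast.Break, ast.Continue)


def find_unreachable(source):
    """Return line numbers of statements that directly follow a
    return/raise/break/continue in the same block."""
    unreachable = []
    for node in ast.walk(ast.parse(source)):
        for field in ("body", "orelse", "finalbody"):
            block = getattr(node, field, None)
            if not isinstance(block, list):
                continue  # e.g. a lambda body is an expression, not a block
            for prev, stmt in zip(block, block[1:]):
                if isinstance(prev, TERMINATORS):
                    unreachable.append(stmt.lineno)
    return sorted(unreachable)


SAMPLE = """\
def price(x):
    if x < 0:
        raise ValueError("negative")
        x = 0
    return x * 2
    print("done")
"""
```

Running `find_unreachable(SAMPLE)` reports lines 4 and 6, the two statements that can never execute.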

3. Security analysis

Security analysis involves examining code for vulnerabilities like buffer overflows, injection flaws, and cross-site scripting risks. It also scans third-party libraries for known vulnerabilities and flags sensitive information accidentally included in source code.

Some popular static application security testing (SAST) tools include:

- Snyk Code

- Microsoft CredScan

- GitHub Advanced Security
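An injection flaw of the kind these tools look for can be illustrated with the standard `sqlite3` module. In this sketch, the `users` table and both lookup functions are hypothetical; the point is the contrast between string concatenation and a parameterized query.

```python
import sqlite3


def find_user_unsafe(conn, name):
    # Vulnerable: user input is concatenated straight into the SQL string.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized query: the driver treats `name` strictly as data.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


def make_demo_db():
    # In-memory database with two example rows, for demonstration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn
```

With the input `x' OR '1'='1`, the unsafe version returns every row in the table, while the safe version returns nothing because no user has that literal name.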

4. Unit tests

Unit tests focus on testing individual components of the code in isolation and verify that each unit performs as expected under various conditions. They are the foundation of your testing strategy because they help ensure each “building block” of your software works correctly.

These tests are usually written by developers and run frequently during refactoring to catch bugs. Some popular frameworks for performing these tests include JUnit, pytest, xUnit, and Jest.

5. Integration tests

These tests validate that multiple units or modules work together as expected. They ensure that the interfaces between components or external systems are properly implemented. Developers usually:

- Test two integrated modules, then expand to larger groups

- Use mock servers or test doubles to avoid dependencies

- Validate database connections, queries, and schema changes

Some tools for these tests include WireMock, Spring Boot Test, and Selenium WebDriver.
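The practices above can be sketched in a small Python example that wires two modules together, uses a real in-memory SQLite database, and replaces the external payment provider with a test double from `unittest.mock`. `OrderRepository`, `OrderService`, and the `charge` method are hypothetical names chosen for illustration.

```python
import sqlite3
from unittest.mock import Mock


class OrderRepository:
    def __init__(self, conn):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(id INTEGER PRIMARY KEY, amount REAL, status TEXT)"
        )

    def save(self, amount, status):
        cur = self.conn.execute(
            "INSERT INTO orders (amount, status) VALUES (?, ?)", (amount, status)
        )
        self.conn.commit()
        return cur.lastrowid

    def status_of(self, order_id):
        row = self.conn.execute(
            "SELECT status FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        return row[0] if row else None


class OrderService:
    def __init__(self, repo, gateway):
        self.repo = repo
        self.gateway = gateway

    def place_order(self, amount):
        # Charge first, then persist the outcome.
        paid = self.gateway.charge(amount)
        return self.repo.save(amount, "paid" if paid else "failed")


def test_order_is_stored_as_paid():
    repo = OrderRepository(sqlite3.connect(":memory:"))
    gateway = Mock()  # test double standing in for the payment provider
    gateway.charge.return_value = True

    order_id = OrderService(repo, gateway).place_order(49.99)

    gateway.charge.assert_called_once_with(49.99)
    assert repo.status_of(order_id) == "paid"
```

The database interaction is real, so the test exercises the query and schema, while the mocked gateway keeps the test independent of any outside service.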

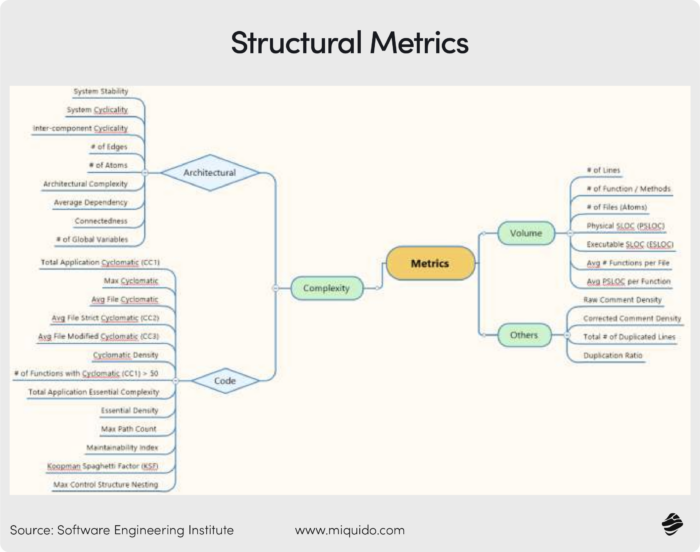

6. Metric-based analysis

Metric-based analysis uses quantitative measures or metrics to evaluate the complexity, maintainability, and quality of the existing codebase. These metrics include cyclomatic complexity, code churn, and coupling between classes or modules.

High complexity or excessive dependencies indicate areas that are error-prone or hard to maintain. Some tools that help with this include CodeClimate and SonarQube.
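To show roughly what such a metric measures, the sketch below approximates cyclomatic complexity for each function in a piece of Python source by counting decision points with the standard `ast` module. It is a simplification under stated assumptions (for example, nested functions are counted into their parent, and some constructs are ignored), not a substitute for the tools named above.

```python
import ast

# Node types treated as one decision point each (a simplifying assumption).
BRANCH_NODES = (
    ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp, ast.comprehension,
)


def cyclomatic_complexity(source):
    """Approximate complexity per function: 1 + number of decision points."""
    scores = {}
    for func in ast.walk(ast.parse(source)):
        if not isinstance(func, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        score = 1
        for node in ast.walk(func):
            if isinstance(node, BRANCH_NODES):
                score += 1
            elif isinstance(node, ast.BoolOp):
                # `a or b or c` adds two extra paths.
                score += len(node.values) - 1
        scores[func.name] = score
    return scores


SAMPLE = """\
def grade(score, bonus):
    if score < 0 or score > 100:
        raise ValueError("out of range")
    for threshold, letter in ((90, "A"), (80, "B")):
        if score + bonus >= threshold:
            return letter
    return "C"
"""
```

For `SAMPLE`, the function `grade` scores 5: one base path, two `if` statements, one `for` loop, and one `or`.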

7. Acceptance tests

Acceptance tests validate that the software meets user needs and business objectives while ensuring all functional requirements are correctly implemented. They also work as the final checkpoint for deployment readiness.

For example, when testing a hospital management system, you might verify that doctors can access patient records, nurses can update treatment plans, and administrators can generate billing reports.

How to set objectives and prioritize refactoring tasks

If you want to refactor legacy code so it works as it should, you need to set clear objectives that will deliver the most value. Here’s how to do that:

1. Understand why you want to refactor

Before getting into refactoring, take a step back and ask yourself: What are you trying to achieve?

Is your team bogged down by slow development cycles because the codebase is too tangled? Are you facing frequent bugs that are hard to track and resolve? Or maybe upcoming features require a more scalable and modular architecture?

You need to figure out your long-term and short-term goals. Here’s what they’d look like:

- Short-term—Fixing bugs, speeding up a performance bottleneck, etc.

- Long-term—Reducing technical debt across the board, restructuring the codebase, etc.

Clear goals act as your roadmap: they tie your efforts to outcomes that support the project’s bigger picture, guide your decisions, and prevent unnecessary work.

Just make sure to avoid vague goals like “make the code better.” Instead, set specific and actionable goals like “reduce cyclomatic complexity in the authentication module to improve testability” or “refactor API endpoints to standardize error handling.” These are more achievable.

2. Identify high-impact areas

Once you know the “why” behind the refactoring process, focus on high-impact areas that will deliver the biggest bang for your buck. These are the areas where improvements will make the most noticeable difference.

Here are some signs of these areas:

- Frequent changes—Is there a module or function your team touches constantly? Code that changes often should be easy to modify without breaking everything else.

- High complexity—Functions or classes with deeply nested logic or excessive branching can be time bombs waiting to go off. You should work on these to reduce errors.

- Low test coverage—If a very important part of your application lacks tests, refactoring will help you add the necessary scaffolding to improve reliability.

- Critical paths—These are sections that directly impact user experience like authentication systems, checkout workflows, or performance-critical APIs.

Tools like SonarQube, CodeClimate, or static code analyzers can find code smells, hotspots, and areas with too much complexity.
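If you want to combine these signals yourself, a rough ranking can be sketched as below. The `ModuleStats` fields, the weighting in `hotspot_score`, and the idea of doubling the score for critical paths are all illustrative assumptions; any real weighting should be tuned to your own codebase.

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    commits_last_90_days: int  # how often the module changes
    complexity: int            # e.g. summed cyclomatic complexity
    coverage: float            # test coverage, 0.0 to 1.0
    on_critical_path: bool     # directly affects user experience


def hotspot_score(m):
    # Illustrative weighting: churn times complexity, scaled up for
    # untested code and doubled for critical paths.
    score = m.commits_last_90_days * m.complexity * (1.0 + (1.0 - m.coverage))
    return score * 2 if m.on_critical_path else score


def rank_hotspots(modules):
    # Highest score first: these are the candidates to refactor first.
    return sorted(modules, key=hotspot_score, reverse=True)
```

A frequently changed, complex, poorly tested module on a critical path rises to the top, which matches the four signs listed above.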

3. Prioritize tasks

Once you’ve identified tasks for refactoring, prioritize them based on a combination of risk, effort, and reward:

- Quick wins: Are there fixes that require minimal effort but will deliver noticeable improvements, like removing redundant code or fixing formatting issues? Start there to build momentum.

- Mission-critical code: These are areas where failure would have the biggest consequences, like payment gateways or core business logic.

- Complex but essential: These tasks might require a lot of refactoring but are necessary for your system to keep functioning and growing, such as breaking a monolith into microservices.

This will help prevent refactoring from becoming an endless cycle and ensure your development team stays focused.

Strategies for minimizing risks when refactoring code

While refactoring is an investment in your codebase’s health, it comes with potential pitfalls—bugs, downtime, or inadvertently breaking something critical. Let’s figure out how you can minimize the risk of these popping up:

1. Make incremental changes

Avoid the temptation to refactor an entire system in one go. Instead, you should divide the work into smaller, incremental tasks that can be implemented and tested independently. Here’s what to do:

- Refactor a single function or class before moving to larger modules

- Test after each incremental change to quickly identify and fix issues

- Use version control systems like Git to revert changes if something goes wrong

Smaller changes are easier to review, test, and debug—and they reduce the risk of introducing new bugs into the code.

2. Perform comprehensive testing on refactored code

Comprehensive testing is your safety net during refactoring because it catches problems before they escalate and ensures your refactored code behaves as expected.

For instance, unit tests focus on the smallest pieces of functionality and ensure that isolated pieces of code still work after changes. So, if you’re refactoring a calculateTax() function, unit tests ensure it still produces correct results for all scenarios, from edge cases to typical inputs.

Similarly, integration tests confirm that these interactions remain intact when you refactor code that interacts with other components—like an API that talks to a database.

Pro tip: Tools like JUnit, pytest, and Selenium automate much of the testing process, making it easier to verify your changes repeatedly and consistently.
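As an illustration of the `calculateTax()` scenario, here is a sketch of a hypothetical Python equivalent, `calculate_tax`, with unit tests for a typical input, a zero amount, a rounding edge case, and an invalid input. The 20% default rate and half-up rounding are assumptions made for the example.

```python
from decimal import Decimal, ROUND_HALF_UP


def calculate_tax(amount, rate=Decimal("0.20")):
    """Hypothetical refactored tax function; rate and rounding are assumed."""
    amount = Decimal(str(amount))
    if amount < 0:
        raise ValueError("amount must not be negative")
    return (amount * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def test_typical_amount():
    assert calculate_tax("100.00") == Decimal("20.00")


def test_zero_amount():
    assert calculate_tax(0) == Decimal("0.00")


def test_rounding_edge_case():
    # 0.125 * 0.20 = 0.025, which rounds half up to 0.03
    assert calculate_tax("0.125") == Decimal("0.03")


def test_negative_amount_is_rejected():
    try:
        calculate_tax("-1")
    except ValueError:
        return
    raise AssertionError("expected ValueError")
```

These functions run as-is under pytest; in a pytest-only suite, the last test would more typically use `pytest.raises(ValueError)`.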

3. Prioritize backward compatibility

When refactoring components with external dependencies—like APIs or libraries—breaking changes can ripple through your system and impact users. To make sure this doesn’t happen, you need to maintain backward compatibility wherever possible.

For example, if you’re refactoring an API, use versioning or deprecation warnings for old endpoints. This gives your users or clients time to adapt while you roll out changes gradually.
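In Python, one way to sketch this is to keep the old function as a thin wrapper that emits a `DeprecationWarning` and delegates to the new one. The function names `get_customer` and `get_customer_v2` and the returned data are hypothetical.

```python
import warnings


def get_customer_v2(customer_id, *, include_orders=False):
    """New entry point (hypothetical)."""
    customer = {"id": customer_id, "name": "Example Customer"}
    if include_orders:
        customer["orders"] = []
    return customer


def get_customer(customer_id):
    """Old entry point, kept so existing callers continue to work."""
    warnings.warn(
        "get_customer() is deprecated; use get_customer_v2() instead",
        DeprecationWarning,
        stacklevel=2,  # point the warning at the caller, not this wrapper
    )
    return get_customer_v2(customer_id)
```

Existing callers keep getting the same result, and the warning gives them a clear signal and time to migrate before the old entry point is removed.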

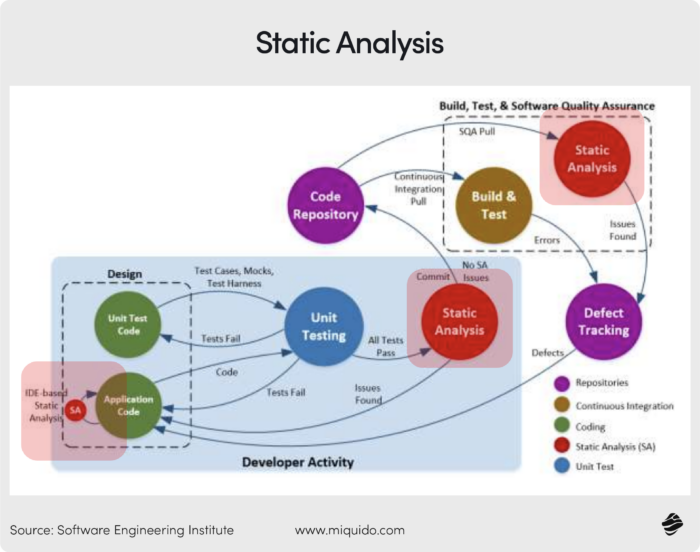

4. Use tools to find issues

Static code analysis tools can help you automatically examine your codebase and identify issues that may not be immediately visible like complex logic, unused variables, or deviations from coding standards.

For instance, a linter like ESLint for JavaScript checks if variables are defined but never used, which makes for cleaner and more maintainable code. Static analysis tools can also flag hidden bugs, such as potential null pointer exceptions or code paths that are never executed.

5. Validate in staging before production

Refactored code should never go straight to production. A staging environment helps here because it lets you simulate real-world scenarios and gives you a safety zone to test your changes before users interact with them.

This helps you reduce the likelihood of downtime caused by unforeseen issues, enables you to run load tests and validate performance under realistic conditions, and makes sure that refactored code interacts as it should with existing systems.

Managing dependencies and external integrations

Managing dependencies and external integrations is a crucial aspect of refactoring legacy code. As systems scale, the complexity of dependencies and the need for thorough testing become more pronounced.

Tools for legacy code refactoring

Refactoring tools help you analyze your codebase, automate repetitive tasks, and make sure changes don’t introduce bugs. Let’s look at some of the most popular:

1. Static analysis tools

Static analysis tools inspect the codebase without executing it. They identify areas that need improvement, such as code smells, complexity issues, or potential bugs. Here are some of the best:

- SonarQube

- CodeClimate

- ESLint/Flake8/Pylint

2. Automated testing suites

Automated testing tools help you create, execute, and manage tests to ensure your refactored code behaves as expected. They include:

- JUnit/TestNG

- Pytest

- Selenium

3. Code coverage tools

Code coverage tools measure how much of your code is tested by automated tests. They are especially useful for identifying untested areas in legacy code that need coverage before refactoring. They include the following:

- JaCoCo

- Coverage.py

- Istanbul

4. Dependency management tools

Legacy code often relies on outdated programming practices and vulnerable dependencies. These dependencies can be a major source of technical debt, so tackling them during refactoring improves security and stability.

Dependency management tools identify issues in your dependency tree and recommend updates or replacements. They include:

- Snyk

- Dependabot

- Maven/Gradle Plugins

5. Version control systems

Version control systems are a must during refactoring because they help you track changes, revert to earlier versions if needed, and collaborate with team members. In short, they act as a safety net.

They include:

- Git/GitHub/GitLab

- Bitbucket (for teams using Jira)

Testing and quality assurance post-refactoring

Refactoring essentially changes the structure of your code, so you need to perform testing to verify that these changes have not introduced bugs or made performance worse—especially when working with legacy systems or production-critical code.

Without testing, changes made during refactoring could cause unintended side effects like logic errors, data inconsistencies, or performance regressions—and could compromise the very systems they’re looking to improve.

Testing makes sure that the application functions as expected. Here’s what it usually includes:

- Regression and integration tests to check that no existing features are broken

- Unit testing to ensure that refactored components work as intended

- Performance testing to make sure that refactoring doesn’t introduce bottlenecks

- Post-deployment testing to find any issues that slipped through

Testing also enforces quality standards. Code coverage tools identify untested areas of the refactored codebase. Automated testing frameworks like JUnit, pytest, or Selenium make this process easier and help teams validate their work quickly.
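For the performance check in particular, a lightweight option in Python is the standard `timeit` module. The sketch below compares a hypothetical legacy function with its refactored replacement; both functions and the `compare` helper are illustrative, and timings vary by machine, so treat the numbers as relative.

```python
import timeit


def total_legacy(values):
    # Hypothetical legacy implementation: manual index-based loop.
    total = 0
    for i in range(len(values)):
        total = total + values[i]
    return total


def total_refactored(values):
    # Hypothetical refactored implementation using the built-in.
    return sum(values)


def compare(old, new, data, repeat=5, number=200):
    # Both versions must agree before timing means anything.
    assert old(data) == new(data)
    old_time = min(timeit.repeat(lambda: old(data), repeat=repeat, number=number))
    new_time = min(timeit.repeat(lambda: new(data), repeat=repeat, number=number))
    return old_time, new_time
```

Taking the minimum of several repeats reduces noise from other processes. A call such as `compare(total_legacy, total_refactored, list(range(1000)))` returns both timings so a regression can be spotted before deployment.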

Monitoring performance and gathering feedback

Monitoring performance and gathering feedback are essential steps in the refactoring process. These activities help ensure that the refactored code meets performance expectations and aligns with user needs. Here are some key considerations for monitoring performance and gathering feedback:

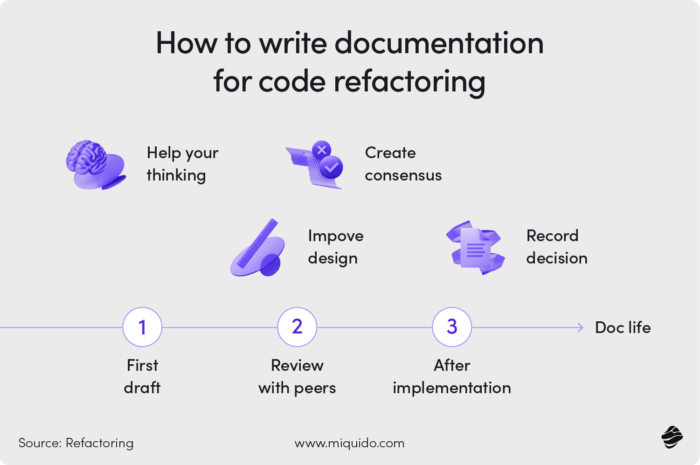

Documenting refactoring efforts

When you refactor legacy code, you make the system work better through changes across class hierarchies, function signatures, or modular dependencies. But you need to keep track of what you’re doing.

Why? To make sure that anyone making changes or adding new features in the future (even if it’s you) understands what changes were made, why they were made, and how the refactored code should be used. If you don’t do this, you might end up back at square one and make it difficult for other developers to maintain or debug the code down the line.

Documentation also acts as a shared reference point for development teams, ensuring everyone understands the current state of the codebase. This is necessary for teams with high turnover or those working on complex systems.

Some ways you could track your refactoring efforts include using:

- Version control tools like Git

- Tools to automate the creation of technical documents based on code comments

- Centralized platforms like Confluence, Notion, or GitHub Wiki to store and organize all documentation

Once you have your documentation on hand, make sure it mentions exactly what you did and what the codebase looks like—before and after the implementation. This will make sure there are no regressions or misunderstandings later on.
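As a small sketch of generating documentation from code comments, Python’s standard `inspect` module can turn a function’s signature and docstring into a reference entry. The `apply_discount` function and the `describe` helper are hypothetical; dedicated documentation generators do much more than this.

```python
import inspect


def apply_discount(price, percent):
    """Return `price` reduced by `percent` (0-100).

    Refactoring note: replaces the old inline discount logic
    (hypothetical example).
    """
    return price * (1 - percent / 100)


def describe(func):
    """Build a short reference entry from a signature and docstring."""
    return f"{func.__name__}{inspect.signature(func)}\n{inspect.getdoc(func)}"
```

Calling `print(describe(apply_discount))` prints the signature followed by the cleaned-up docstring, so the explanation of what changed and why stays next to the code it describes.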

In closing

Legacy systems accumulate technical debt, which complicates updates, increases development time, and makes deploying new features more expensive.

Refactoring or digital transformation consulting reduces these inefficiencies, breaks down code monoliths, and supports future scalability—when done right. But when it isn’t, refactoring can compromise the very systems a company is looking to improve.