For several years, the law has struggled to keep up with the rapid development and adoption of AI. However, the regulatory Wild West period has come to an end, at least in the European Union, with the introduction of the EU AI Act. Recognizing the significance of this milestone, Miquido dedicated the ninth edition of its AI Waves webinar solely to the AI Act, gathering experts who specialize in the area.

Among the invited guests, we had Cornelia Kutterer, Senior Research Fellow at the University of Grenoble and the Managing Director at Considerati, who brought her invaluable expertise in legal compliance, AI governance, and public policy. Paula Skrzypecka, a Senior Associate at Creative Legal with a PhD in Law, joined the conversation as an expert in tech law and GDPR compliance.

Rounding out the panel was Dr. Ansgar Koene, a global AI ethics and regulatory leader at EY, whose background in electrical engineering and computational neuroscience brings a unique perspective to the conversation.

As with every edition of AI Waves, a series of webinars dedicated to AI, the conversation was led by Jerzy Biernacki. As Chief AI Officer at Miquido, he combines his academic knowledge (PhD in Computer Science) with years of practical experience to help companies crack their toughest problems and grow their business with tailored AI development services.

EU AI Act Explained: Key Questions Addressed

What obligations does the long-awaited AI Act place on companies? What good practices should you implement for your AI and Generative AI use cases to comply with its requirements? What exactly should you do once the AI Act enters into force, and how much time do you have? Is this the final shape of the regulations? The experts addressed these and other questions relevant to entrepreneurs and professionals working directly with AI. Dive into our EU AI Act overview to discover the answers!

What is the AI Act and What Are Its Goals?

The AI Act is a groundbreaking piece of legislation designed to regulate artificial intelligence (AI) across various sectors within the European Union. As the first comprehensive AI regulation globally, it sets forth a series of requirements and compliance measures that businesses must adhere to, ensuring that AI technologies are developed and used responsibly.

The primary goal of the AI Act is to build public confidence in AI technologies and encourage their adoption in a safe and beneficial manner. It aims to establish clear rules and standards that promote transparency, accountability, and human oversight, addressing key concerns about the impact of AI on safety, security, and fundamental rights.

While its name might suggest otherwise, the Act does not attempt to regulate all aspects of AI. It focuses on areas where AI systems could affect the safety, security, or fundamental rights of individuals within the EU.

The Risk Categories Defined by the AI Act

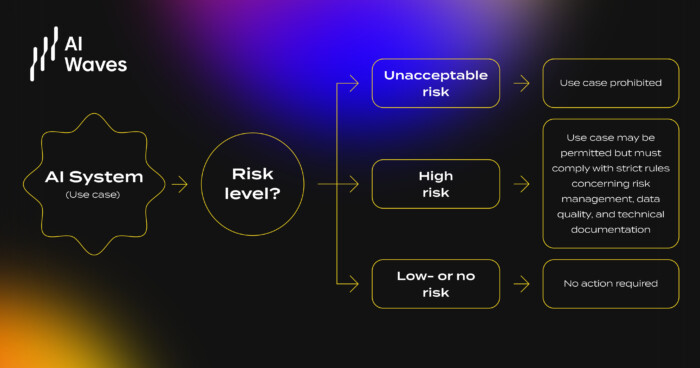

Categorization of risk is the foundation of the AI Act, enabling the stratification of measures and obligations. The Act distinguishes four categories, from the highest to the lowest risk:

Prohibited AI Systems in the EU AI Act

The EU bans several types of AI systems under the AI Act to protect fundamental rights and prevent harm. Prohibited systems include those using subliminal manipulation to influence behavior, exploiting vulnerabilities based on personal traits, and biometric categorization by sensitive characteristics like ethnicity or religion.

General-purpose social scoring, real-time remote biometric identification in public spaces for law enforcement (with limited exceptions), emotion recognition at work or in education, predictive policing based solely on profiling, and the untargeted scraping of facial images to build facial recognition databases are also banned.

High-Risk AI Systems in the EU AI Act

For high-risk systems, companies must implement mechanisms, procedures, and assessments to ensure that the systems operate safely and as intended. This category covers systems used in areas such as critical infrastructure, education, and healthcare, and its scope is broad. For instance, both AI supporting medical scanning devices and software powering digital courses could be classified as high-risk.

Limited-Risk AI Systems in the EU AI Act

Limited-risk systems do not require human oversight; instead, their creators and deployers must ensure transparency. People must be told when they are interacting with an AI system. This category includes chatbots and deepfakes, so it is mostly associated with the risks of generative AI in business.

Low-Risk AI Systems in the EU AI Act

For low-risk systems, there are no specific obligations for any party involved in their implementation. However, as Dr. Ansgar Koene underlines, the EU recommends having voluntary codes of conduct around them.

Obligations Related to the EU AI Act for Each Category

Under the EU AI Act, providers, importers, and deployers of AI systems have specific obligations to ensure safety and compliance.

Pre-deployment obligations include establishing a robust risk management system, using appropriate datasets, and ensuring the robustness and accuracy of AI systems.

Post-deployment monitoring is required to continuously assess the performance and impact of the deployed AI systems.

To further ensure compliance, all personnel involved with AI systems must possess adequate AI literacy, enabling them to understand and manage the technology effectively.

Where Does the EU AI Act Apply and Who Should Comply?

The AI Act takes a similar approach to the GDPR: it applies regardless of where the provider or deployer of the AI system is based. It is relevant if your system affects people in the EU, even if it is not primarily targeted at users there.

Consequences of Not Complying with the EU AI Act

Neglecting EU AI Act compliance and violating its prohibitions can result in fines of up to 35 million euros or 7% of global annual turnover, whichever is higher. The final sum depends on the category of the system and the gravity of the non-compliance.
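To make the arithmetic concrete, here is a minimal Python sketch of the fine ceiling for the most serious violations, using the two figures quoted above. The function name and the `sme` flag are our own illustrative choices. The sketch assumes that the higher of the two amounts applies to most companies and the lower one to SMEs and start-ups, which is our reading of Article 99 of the Act. It shows only the upper bound, not the fine a regulator would actually impose.

```python
from decimal import Decimal

# Figures quoted in the article for the most serious violations.
FIXED_CAP_EUR = Decimal("35000000")
TURNOVER_SHARE = Decimal("0.07")


def max_fine_eur(global_annual_turnover_eur: Decimal, sme: bool = False) -> Decimal:
    """Return the upper bound of the fine for a given worldwide annual turnover.

    Assumption: the higher of the two amounts is the ceiling for most
    companies, the lower one for SMEs and start-ups.
    """
    turnover_based = global_annual_turnover_eur * TURNOVER_SHARE
    pick = min if sme else max
    return pick(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    for turnover in (Decimal("100000000"), Decimal("2000000000")):
        print(f"turnover {turnover} EUR -> ceiling {max_fine_eur(turnover)} EUR")
```

For a company with a turnover of 2 billion euros, the percentage-based amount (140 million euros) exceeds the fixed amount, so it becomes the ceiling. For a turnover of 100 million euros, the fixed 35 million euros is the higher figure.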

EU AI Act Overview: Impact on Businesses

The EU AI Act introduces a comprehensive regulatory framework for AI across various sectors. Companies must evaluate their position within the AI value chain to determine their specific obligations, which can vary widely depending on their role as providers, importers, or deployers of AI systems. This can be complex, as roles may overlap or evolve.

According to Cornelia Kutterer, with the rise of AI compliance tools, adhering to these regulations will become more manageable. However, organizations must remain agile and vigilant, continually monitoring changes in the regulatory landscape. The Act's guidelines will undergo ongoing regulatory review and adjustments, making it crucial for businesses to stay informed and flexible.

EU AI Act Overview: Detailed Timeline 2024-2027

At the time of the webinar, the exact dates for the EU AI Act had not yet been set, so Paula Skrzypecka outlined the expected timeline. Now that the date of entry into force is known, we list the exact dates, as set out in the adopted text of the Act, that every company should be aware of.

- Entry into Force: 1st of August 2024

- Bans on AI Systems with Unacceptable Risks Become Applicable: 2nd of February 2025

- Codes of Practice for General-Purpose AI Models Expected to Be Ready: 2nd of May 2025

- Regulations for General-Purpose AI Models Come into Effect: 2nd of August 2025

- European Commission to Issue Implementing Act on Post-Market Monitoring: 2nd of February 2026

- Obligations for High-Risk Systems Listed in Annex III Take Effect: 2nd of August 2026

- Obligations for High-Risk Systems Outside Annex III, Used as Product Safety Components, Take Effect: 2nd of August 2027

Practical Steps for Compliance with the EU AI Act

We already know the obligations and requirements that the EU AI Act brings to the table. But what does it mean in practice? Here are the steps to take on the way to compliance:

AI Inventory

Identify where AI is used within your organization, whether in dedicated tools developed and maintained by the company, software as a service, or open-source tools. Define the areas it is applied to and work out whether they are sensitive: for instance, does the use case involve processing customer data, and if so, what kind of data? For each AI use case, define your company's role in the value chain.
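An inventory like this can start as a simple structured list. The Python sketch below is one possible shape for it, not a prescribed format. The class and field names (`AIUseCase`, `Sourcing`, `Role`, `customer_data_categories`) and the two sample entries are hypothetical and serve only to show which questions each entry should answer.

```python
from dataclasses import dataclass, field
from enum import Enum


class Sourcing(Enum):
    IN_HOUSE = "in-house"
    SAAS = "software as a service"
    OPEN_SOURCE = "open source"


class Role(Enum):
    PROVIDER = "provider"
    IMPORTER = "importer"
    DEPLOYER = "deployer"


@dataclass
class AIUseCase:
    """One inventory entry: a single place where AI is used."""
    name: str
    sourcing: Sourcing
    business_area: str
    role: Role
    # Kinds of customer data the use case processes; empty if none.
    customer_data_categories: list[str] = field(default_factory=list)

    @property
    def processes_customer_data(self) -> bool:
        return bool(self.customer_data_categories)


def needing_data_review(inventory: list[AIUseCase]) -> list[str]:
    """Return the names of use cases that process customer data."""
    return [uc.name for uc in inventory if uc.processes_customer_data]


# Hypothetical sample entries.
inventory = [
    AIUseCase("support chatbot", Sourcing.SAAS, "customer service",
              Role.DEPLOYER, ["customer messages"]),
    AIUseCase("code completion", Sourcing.OPEN_SOURCE, "engineering",
              Role.DEPLOYER),
]

print(needing_data_review(inventory))
```

Recording the sourcing, business area, data categories, and value-chain role for every entry gives the later risk assessment a consistent starting point.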

Risk Assessment

Determine risk levels of AI systems. The technical features of your AI system do not really matter. What matters is the way you are using AI—whether it has a direct impact on human decisions, what kind of decisions it impacts, what kind of output you receive, and what areas it relates to. For instance, using AI in critical infrastructure or education could place this particular use case in a high-risk category.
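As a rough illustration of this use-based thinking, the Python sketch below triages a use case into one of the four tiers from a handful of yes/no answers. It is a deliberately simplified first pass and no substitute for legal analysis. The question names, the `triage` function, and the short list of sensitive areas (taken from the examples in this article) are our own assumptions. Note that none of the questions concern the underlying model or technology.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    LOW = "low"


# Areas this article names as examples of high-risk contexts; not exhaustive.
SENSITIVE_AREAS = frozenset({"critical infrastructure", "education", "healthcare"})


@dataclass(frozen=True)
class UseCaseAnswers:
    """Answers to a simplified, hypothetical triage questionnaire."""
    area: str
    uses_banned_practice: bool = False       # e.g. social scoring
    interacts_with_people: bool = False      # e.g. a chatbot
    generates_synthetic_media: bool = False  # e.g. deepfakes


def triage(answers: UseCaseAnswers) -> RiskTier:
    """Return a first-pass tier; checks run from the strictest tier down."""
    if answers.uses_banned_practice:
        return RiskTier.PROHIBITED
    if answers.area.strip().lower() in SENSITIVE_AREAS:
        return RiskTier.HIGH
    if answers.interacts_with_people or answers.generates_synthetic_media:
        return RiskTier.LIMITED
    return RiskTier.LOW


print(triage(UseCaseAnswers(area="education", interacts_with_people=True)))
print(triage(UseCaseAnswers(area="marketing", interacts_with_people=True)))
```

Checking the strictest tier first means a chatbot used in education is flagged as high-risk rather than limited-risk, which mirrors the point that the context of use drives the classification.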

Procedural Measures

Implement risk management, data governance, and technical documentation procedures. The higher the risk, the more emphasis should be placed on refining these processes. The company's place in the value chain also matters: as a provider, you should consider those further down the chain when preparing documentation and ensuring its accessibility.

Compliance Monitoring

Stay updated with regulatory changes and monitor compliance. The regulations will continue to evolve, and it is essential to stay on top of them. Compliance tools may be a great help in this process.

Collaborative Compliance - an Essential Aspect of the AI Act

Collaborative compliance with the EU AI Act necessitates an interdisciplinary approach, integrating legal, technical, and compliance expertise. Companies must establish a clear organizational structure for AI governance, determining whether responsibilities lie with AI officers, compliance officers, or privacy officers.

Given the complexity and scope of AI regulations, high-level endorsement from C-level executives is crucial to ensure robust support and resources for compliance efforts. This multidisciplinary approach fosters collaboration across departments, enabling a cohesive strategy that includes the preparation of AI system inventories, risk assessments, and implementation plans.

By combining legal analysis with technical solutions—whether developed in-house or through third-party vendors—organizations can effectively navigate the compliance landscape, ensuring that all facets of AI governance are adequately addressed.

EU AI Act: Challenges and Opportunities for Companies

The EU AI Act presents both challenges and opportunities for companies navigating the evolving regulatory landscape. One significant challenge is the lack of detailed guidelines, as the Act mandates certain procedures and measures without clearly defining standards for acceptable accuracy or robust data governance frameworks.

This leaves businesses in a position where they must interpret what constitutes compliance, relying on technical standards that are yet to be fully developed. However, an opportunity lies in the overlap between the AI Act and existing regulations like the GDPR.

As Paula Skrzypecka points out, companies that have already established compliance with GDPR's rigorous data protection standards may find it easier to adapt to the AI Act's requirements, as both regulations emphasize similar principles, such as minimizing biases and ensuring human oversight in high-risk AI systems.

This alignment can provide a strong foundation for businesses to build upon, facilitating a smoother transition to full compliance with the new AI regulations.

Get a Full Overview of the EU AI Act

We hope this summary gave you an idea of the changes the EU AI Act brings for both professionals and companies, and of what you should keep in mind when using AI for business automation. To get even deeper insights, watch the whole recording of the AI Waves webinar, where the experts elaborate on each of the essential topics mentioned here.
