What is a Recurrent Neural Network: Definition

What is a Recurrent Neural Network

If you’re asking “what is a recurrent neural network?” — here’s the answer in plain terms:

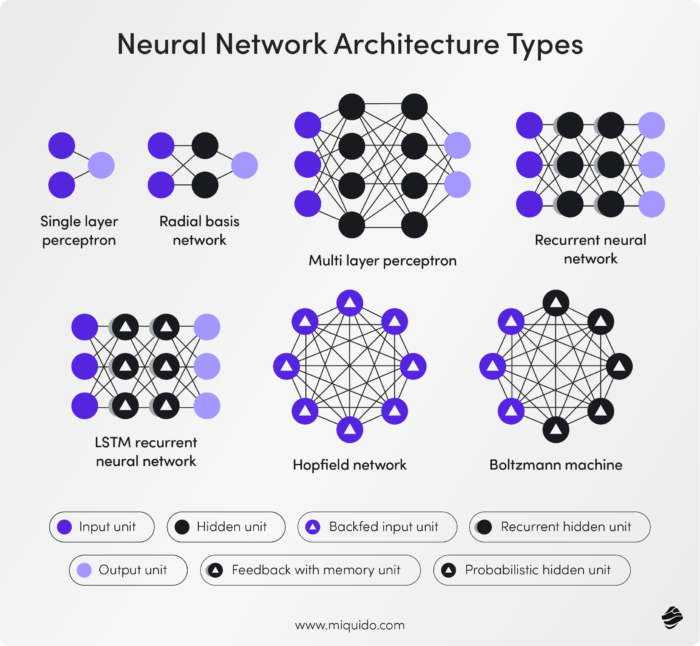

A Recurrent Neural Network (RNN) is a type of artificial neural network designed to recognize patterns in sequences of data, such as time series, text, audio, or video. A feedforward neural network maps each input to an output in a single pass and keeps nothing from one input to the next. An RNN, by contrast, has a memory: it retains information from earlier inputs and uses it to influence how it processes the current one. That makes RNNs well suited to tasks where order, timing, and context matter.

At the heart of an RNN is a loop: a feedback connection that passes information from one step of the sequence to the next. As a result, RNNs can learn how current inputs relate to previous ones, which makes them a good fit for sequences, trends, and dependencies that evolve over time.
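The loop can be written as a recurrence. The form below is the common textbook formulation of a simple RNN, offered here as an illustration; the symbols are assumptions and do not come from this glossary entry. Here $x_t$ is the input at step $t$, $h_t$ is the hidden state (the memory), $y_t$ is the output, and the $W$ and $b$ terms are learned weights and biases.

$$
h_t = \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right), \qquad y_t = W_{hy}\, h_t + b_y
$$

The term $W_{hh}\, h_{t-1}$ is the feedback connection: it is the only path by which earlier inputs can affect the current step.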

Why RNNs Matter

What makes recurrent neural networks special is their ability to model sequential data, which models that treat every input independently cannot do. In everyday applications, this includes:

- Machine Translation: Understanding how the words in a sentence relate to each other and producing the translation one word at a time

- Speech Recognition: Interpreting spoken language in real time

- Time Series Forecasting: Predicting future values based on past data

- Text Generation: Writing coherent and context-aware content

Because of their memory-driven design, RNNs are widely used in natural language processing (NLP), where context is critical, and in any domain where past events influence future ones. In language modeling, for example, an RNN learns from the words it has already seen and uses that context to predict what comes next.

How RNNs Work

To understand what a recurrent neural network is, it helps to look at how it functions. An RNN processes one element of a sequence at a time. At each step, it takes in an input, updates its internal state (often called the hidden state), and produces an output. Because the hidden state carries relevant information forward from previous steps, the network builds up a contextual understanding and can capture dependencies across time.
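The step-by-step process described above can be sketched in a few lines of NumPy. This is a minimal illustration of a simple (vanilla) RNN forward pass, not a production implementation: the function name `rnn_forward`, the weight names, the `tanh` activation, and the layer sizes are all assumptions chosen for the example, and the weights are random rather than trained.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, W_hy, b_h, b_y):
    """Run a simple RNN over a sequence, one element at a time."""
    h = np.zeros(W_hh.shape[0])          # internal state starts out empty
    outputs = []
    for x_t in inputs:                   # one element of the sequence per step
        # Update the state from the current input and the previous state.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        # Produce this step's output from the updated state.
        outputs.append(W_hy @ h + b_y)
    return np.stack(outputs), h

# Hypothetical sizes and random (untrained) weights, for illustration only.
rng = np.random.default_rng(0)
input_size, hidden_size, output_size, seq_len = 3, 5, 2, 4
W_xh = rng.normal(scale=0.1, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
W_hy = rng.normal(scale=0.1, size=(output_size, hidden_size))
b_h = np.zeros(hidden_size)
b_y = np.zeros(output_size)

inputs = rng.normal(size=(seq_len, input_size))
outputs, final_state = rnn_forward(inputs, W_xh, W_hh, W_hy, b_h, b_y)
print(outputs.shape)      # (4, 2): one output per step
print(final_state.shape)  # (5,): the state after the last step
```

The same weights are reused at every step; only the state `h` changes, which is what lets the network handle sequences of any length.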

Advanced forms of RNNs include:

- Bidirectional RNNs (BRNNs): These process the sequence in both the forward and backward directions, so the model can draw on future context as well as past input.

- LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit): These architectures mitigate key issues in standard RNNs, especially the vanishing gradient problem, which can make it difficult to learn long-term patterns. Both use gates to control what the network keeps. In an LSTM, the input gate regulates how much new information enters the memory cell, the forget gate decides how much of the existing cell contents to keep, and the output gate determines what information is output from the memory cell.

In every variant, the output layer is the part of the RNN that produces the final results after the input data has been processed through the hidden layers.
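To make the LSTM gates more concrete, here is a rough sketch of a single LSTM step in NumPy. It follows the standard LSTM formulation, which has a forget gate alongside the input and output gates. The function name `lstm_step`, the packed weight layout (one matrix holding all four blocks), and the sizes are assumptions made for this example; real libraries arrange their parameters differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step.

    Assumed layout: W has shape (4 * hidden, input + hidden) and b has
    shape (4 * hidden,), stacked as [input gate, forget gate, output gate,
    candidate values].
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    i = sigmoid(z[:hidden])                  # input gate: how much new information enters the cell
    f = sigmoid(z[hidden:2 * hidden])        # forget gate: how much of the old cell is kept
    o = sigmoid(z[2 * hidden:3 * hidden])    # output gate: how much of the cell is exposed
    g = np.tanh(z[3 * hidden:])              # candidate values to write into the cell
    c = f * c_prev + i * g                   # updated memory cell
    h = o * np.tanh(c)                       # updated hidden state (the step's output)
    return h, c

# Hypothetical sizes and random (untrained) weights, for illustration only.
rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W = rng.normal(scale=0.1, size=(4 * hidden_size, input_size + hidden_size))
b = np.zeros(4 * hidden_size)

h, c = lstm_step(rng.normal(size=input_size),
                 np.zeros(hidden_size), np.zeros(hidden_size), W, b)
print(h.shape, c.shape)  # (4,) (4,)
```

Because the cell update is mostly additive (`f * c_prev + i * g`), information can pass through many steps with less distortion than in a simple RNN, which is the usual explanation for why LSTMs cope better with long-term patterns.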

Challenges of Recurrent Neural Networks

While RNNs are powerful, they’re not without limitations. Training them effectively requires careful design. Common challenges include:

- Vanishing or exploding gradients: As the error signal is propagated back through many time steps, it can shrink toward zero or grow without bound, which makes it hard to learn from long sequences. This is a side effect of the step-by-step processing that lets RNNs capture temporal dependencies in the first place.

- Slow training times, especially with longer inputs: Each step depends on the result of the previous one, so the steps of a sequence cannot be computed in parallel. Feedforward and convolutional neural networks avoid this cost and excel when input and output sizes are fixed, but they struggle with sequential data.

- Difficulty capturing very long-term dependencies, even with improvements like LSTM or GRU.
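The gradient problem can be illustrated with a toy calculation. The sketch below makes simplifying assumptions: it uses a linear recurrence (the activation function's derivative is ignored), and the recurrent weight matrices are scaled identity matrices chosen by hand. The function name `backprop_norms` is hypothetical. Under those assumptions, pushing a gradient back through each time step multiplies it by the transposed recurrent weights, so its size changes geometrically with the number of steps.

```python
import numpy as np

def backprop_norms(W_hh, steps):
    """Norm of a gradient vector after each step of backpropagation
    through a linear recurrence with recurrent weights W_hh."""
    grad = np.ones(W_hh.shape[0])
    norms = []
    for _ in range(steps):
        grad = W_hh.T @ grad               # one step back in time
        norms.append(np.linalg.norm(grad))
    return norms

small_weights = 0.5 * np.eye(4)   # every step halves the gradient
large_weights = 1.5 * np.eye(4)   # every step grows it by 50%

vanishing = backprop_norms(small_weights, 30)
exploding = backprop_norms(large_weights, 30)

print(f"after 30 steps, small weights: {vanishing[-1]:.2e}")  # shrinks toward zero
print(f"after 30 steps, large weights: {exploding[-1]:.2e}")  # grows very large
```

In a real RNN the picture is more complicated because of the nonlinearity and because the weights are not scaled identities, but the same multiplicative effect is what makes long sequences hard to train on.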

Researchers continue to explore ways to improve training stability, reduce computation, and enhance performance — including combining RNNs with Convolutional Neural Networks (CNNs) or replacing them entirely with newer models like Transformers.

What is a Recurrent Neural Network: In Summary

So, what is a recurrent neural network?

It’s a memory-enabled neural network that excels at understanding sequences. Whether you’re working with language, audio, or time-based data, RNNs bring the ability to learn from context, retain relevant information, and make predictions based on more than just the present moment.

They’re foundational tools in machine learning, especially where the order and timing of the data carry meaning. While they come with challenges, their ability to model real-world sequential patterns makes them a core part of modern AI systems.
