Prompt Tuning Definition

What is Prompt Tuning?

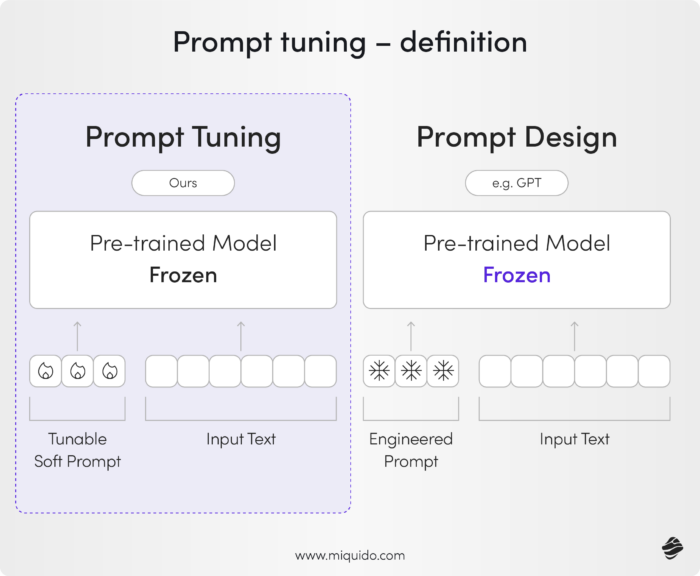

Prompt-tuning is an efficient, low-cost way of adapting large AI foundation models to new downstream tasks without retraining the model or updating its weights. Instead of modifying the model’s billions or even trillions of parameters, prompt-tuning creates task-specific cues, called prompts, that are processed alongside the input data to steer the model toward accurate and relevant outputs. This dramatically reduces computational cost, energy usage, and time while still leveraging the knowledge the foundation model acquired during pretraining.

Foundation models such as GPT, Gemini, Mistral, or Claude have been pretrained on massive datasets covering a wide range of knowledge. Although these models are general-purpose by design, they can be specialized for tasks like contract analysis, fraud detection, or sentiment analysis. Traditionally, the go-to method for this customization was fine-tuning: retraining parts of the model on labeled, task-specific data. As models have grown far larger, however, fine-tuning has become increasingly resource-intensive, driving the rise of more sustainable alternatives like prompt-tuning.

How Does Prompt-Tuning Work?

Prompt-tuning works by supplying task-specific prompts, either written by hand or learned as embeddings, that give the model context for the task. These prompts act as instructions, or cues, that steer the model toward task-specific predictions or decisions. Giving the model a specific persona or context in this way can make its outputs more relevant to the task at hand.

- Manual Prompts (Hard Prompts): Originally, prompt-tuning relied on manually written instructions. For example, to adapt a language model for translation, a simple input like “Translate English to French: cheese” would prompt the model to respond with “fromage.” While effective for some tasks, manual prompts often require significant trial and error to achieve optimal results.

- AI-Designed Prompts (Soft Prompts): More advanced prompt-tuning methods replace hand-crafted prompts with embeddings, that is, vectors of numbers learned by gradient descent on labeled task examples rather than written by a person. These embeddings are injected at the model’s input layer, typically prepended to the embeddings of the input tokens. They are task-specific but don’t alter the model itself: during training, only the prompt vectors are updated while the model’s parameters stay frozen.
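To make the soft-prompt idea concrete, here is a minimal sketch under deliberately toy assumptions: the "frozen model" is a hypothetical two-dimensional embedding table plus a fixed linear head (`EMBED`, `HEAD`), not a real foundation model, and the names `forward`, `loss`, and `train_soft_prompt` are illustrative. The only trainable state is a short list of prompt vectors prepended to the token embeddings; gradient descent updates those vectors and never touches `EMBED` or `HEAD`.

```python
import math

# Hypothetical frozen "model": a fixed embedding table and a fixed linear head.
# A real foundation model would be a pretrained transformer; these toy values
# are chosen only so the example is small enough to follow by hand.
EMBED = {
    "good": [1.0, 0.0],
    "bad": [-1.0, 0.0],
    "movie": [0.0, 1.0],
    "plot": [0.0, 1.0],
}
HEAD = [1.0, -2.0]  # frozen; biased so that unprompted inputs lean "negative"

# Tiny labeled dataset for a sentiment task (1 = positive, 0 = negative).
DATA = [
    (["good", "movie"], 1),
    (["bad", "movie"], 0),
    (["good", "plot"], 1),
    (["bad", "plot"], 0),
]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(prompt, tokens):
    """Prepend soft-prompt vectors to the token embeddings, mean-pool, and score."""
    seq = prompt + [EMBED[t] for t in tokens]
    pooled = [sum(vec[i] for vec in seq) / len(seq) for i in range(len(HEAD))]
    logit = sum(w * x for w, x in zip(HEAD, pooled))
    return sigmoid(logit), len(seq)


def loss(prompt, data):
    """Mean binary cross-entropy of the frozen model with the given prompt."""
    total = 0.0
    for tokens, y in data:
        p, _ = forward(prompt, tokens)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train_soft_prompt(data, num_prompt_tokens=2, lr=0.5, epochs=200):
    """Gradient descent on the prompt vectors only; EMBED and HEAD stay frozen."""
    dim = len(HEAD)
    prompt = [[0.0] * dim for _ in range(num_prompt_tokens)]
    for _ in range(epochs):
        grad = [[0.0] * dim for _ in range(num_prompt_tokens)]
        for tokens, y in data:
            p, seq_len = forward(prompt, tokens)
            # dLoss/dlogit = p - y, and dlogit/dprompt[j][i] = HEAD[i] / seq_len
            for j in range(num_prompt_tokens):
                for i in range(dim):
                    grad[j][i] += (p - y) * HEAD[i] / seq_len
        for j in range(num_prompt_tokens):
            for i in range(dim):
                prompt[j][i] -= lr * grad[j][i] / len(data)
    return prompt


if __name__ == "__main__":
    untrained = [[0.0, 0.0], [0.0, 0.0]]
    trained = train_soft_prompt(DATA)
    print("loss before:", round(loss(untrained, DATA), 3))
    print("loss after: ", round(loss(trained, DATA), 3))
    for tokens, y in DATA:
        print(tokens, "label", y, "p(positive) =", round(forward(trained, tokens)[0], 3))
```

Because this toy model mean-pools its inputs, the soft prompt can only act as a learned offset that corrects the head's built-in bias. In a real transformer, attention lets every prompt vector interact with every input token, which makes soft prompts considerably more expressive.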

Key Benefits of Prompt-Tuning

- Efficiency: Because the model stays “frozen” (its parameters are unchanged), prompt-tuning avoids retraining the model; only the small set of prompt parameters is learned. This can reduce compute and energy usage by up to 1,000 times compared with fine-tuning, saving thousands of dollars. Prompt-tuning belongs to the broader family of parameter-efficient fine-tuning (PEFT) methods, which adapt a model by training only a small number of parameters.

- Low Data Requirements: Prompt-tuning is particularly useful for tasks with limited labeled data. In effect, a well-tuned prompt substitutes for additional training data: it only has to draw out knowledge the model already acquired during pretraining, rather than teach that knowledge from scratch.

- Scalability Across Domains: Prompts can be tailored for diverse tasks, such as analyzing legal contracts, detecting fraud, or summarizing documents, without needing a separate model for each task. A single frozen model can serve every application, with only a small, task-specific prompt stored per task.
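The efficiency and scalability points above come down to simple arithmetic. The sketch below compares the storage needed to serve several tasks with full fine-tuning (one full copy of the weights per task) versus prompt-tuning (one shared frozen model plus a small prompt per task). All inputs are hypothetical: a 7-billion-parameter model, a 4,096-dimensional embedding, a 20-token soft prompt, and 2 bytes per parameter. The helper `compare_footprints` is illustrative, and it estimates storage only, not training compute.

```python
from dataclasses import dataclass


@dataclass
class Footprint:
    prompt_params_per_task: int  # trainable parameters per task with prompt-tuning
    trainable_fraction: float    # prompt params as a fraction of model params
    full_finetune_bytes: int     # one full copy of the weights per task
    prompt_tuning_bytes: int     # one shared frozen model + one prompt per task


def compare_footprints(model_params, embed_dim, prompt_len, num_tasks, bytes_per_param=2):
    """Storage needed to serve `num_tasks` tasks under each adaptation strategy."""
    # A soft prompt is `prompt_len` vectors, each of size `embed_dim`.
    prompt_params = prompt_len * embed_dim
    full = num_tasks * model_params * bytes_per_param
    shared = (model_params + num_tasks * prompt_params) * bytes_per_param
    return Footprint(prompt_params, prompt_params / model_params, full, shared)


if __name__ == "__main__":
    # Hypothetical model and prompt sizes, for illustration only.
    fp = compare_footprints(model_params=7_000_000_000, embed_dim=4096,
                            prompt_len=20, num_tasks=10)
    print(f"trainable params per task: {fp.prompt_params_per_task:,}")
    print(f"trainable fraction: {fp.trainable_fraction:.6%}")
    print(f"full fine-tuning storage: {fp.full_finetune_bytes / 1e9:.1f} GB")
    print(f"prompt-tuning storage:    {fp.prompt_tuning_bytes / 1e9:.1f} GB")
```

With these assumed inputs, each task adds 81,920 trainable parameters (about 0.0012% of the model), and ten tasks need roughly 140 GB of weights with full fine-tuning versus about 14 GB with prompt-tuning.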

Emerging Applications and Techniques

Prompt-tuning originated with large language models (LLMs), which are particularly well suited to it because of their extensive pretraining, but it has since expanded to foundation models that handle audio, video, and image data. In these settings, prompts may include snippets of text, streams of speech, or blocks of pixels in images, each tuned to improve the model’s performance on a specialized task.
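For images, one simple way to form a "block of pixels" prompt, assumed here for illustration and similar in spirit to model-reprogramming approaches, is to place the input image at the center of a larger canvas whose border consists of trainable pixels. The sketch below only builds the prompted input; `frame_size` and `apply_pixel_prompt` are hypothetical helpers, and in practice the border values would be optimized by gradient descent through the frozen vision model, just as soft prompts are for text.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def frame_size(height: int, width: int, pad: int) -> int:
    """Number of trainable pixels in a frame of width `pad` around an H x W image."""
    return (height + 2 * pad) * (width + 2 * pad) - height * width


def apply_pixel_prompt(image: Image, prompt: List[float], pad: int) -> Image:
    """Place the image at the center of a larger canvas whose border holds the prompt."""
    height, width = len(image), len(image[0])
    if len(prompt) != frame_size(height, width, pad):
        raise ValueError("prompt length must match the frame size")
    border = iter(prompt)  # border pixels are filled in row-major order
    canvas = []
    for r in range(height + 2 * pad):
        row = []
        for c in range(width + 2 * pad):
            if pad <= r < pad + height and pad <= c < pad + width:
                row.append(image[r - pad][c - pad])  # original pixel, unchanged
            else:
                row.append(next(border))  # trainable prompt pixel
        canvas.append(row)
    return canvas


if __name__ == "__main__":
    img = [[0.2, 0.4], [0.6, 0.8]]
    prompt = [0.0] * frame_size(2, 2, pad=1)  # 12 trainable pixels, initialized to zero
    for row in apply_pixel_prompt(img, prompt, pad=1):
        print(row)
```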

Here are some emerging areas where prompt-tuning is making an impact:

- Multi-Task Learning: Researchers are developing universal prompts that can adapt to multiple tasks, enabling models to pivot quickly from one application to another.

- Continual Learning: New techniques like CODA-Prompt allow models to learn new tasks incrementally without forgetting previous ones, mitigating the problem of catastrophic forgetting. This is particularly useful in dynamic environments where tasks evolve over time.

- AI Bias Mitigation: Prompt-tuning is also being used to address algorithmic biases inherent in large models. For example, IBM’s FairIJ and FairReprogram methods use AI-designed prompts to reduce gender and racial biases in decision-making tasks, such as salary predictions or image classifications.
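The continual-learning idea can be illustrated with a simplified sketch in the spirit of CODA-Prompt, which builds each input's prompt as a weighted sum of learned prompt components. As we understand the method, each component has a key and an attention vector, and its weight is the cosine similarity between its key and the input's query feature after element-wise multiplication by its attention vector. The sketch assumes the query comes from a frozen encoder and that components from earlier tasks are frozen when a new task begins; the `frozen` flag only records which components a training loop would skip. Class and function names are hypothetical, and this is not the authors' implementation.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vector = List[float]


@dataclass
class PromptComponent:
    key: Vector           # K_m: matched against the attended query
    attention: Vector     # A_m: element-wise weighting of query features
    prompt: List[Vector]  # P_m: prompt_len vectors of size embed_dim
    frozen: bool = False  # components from earlier tasks stop receiving updates


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity, defined as 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def compose_prompt(query: Vector,
                   components: List[PromptComponent]) -> Tuple[List[Vector], List[float]]:
    """Weight each component by cos(query * A_m, K_m) and sum the weighted prompts."""
    weights = [
        cosine([q * a for q, a in zip(query, comp.attention)], comp.key)
        for comp in components
    ]
    prompt_len = len(components[0].prompt)
    embed_dim = len(components[0].prompt[0])
    composed = [[0.0] * embed_dim for _ in range(prompt_len)]
    for w, comp in zip(weights, components):
        for i in range(prompt_len):
            for j in range(embed_dim):
                composed[i][j] += w * comp.prompt[i][j]
    return composed, weights


def start_new_task(components: List[PromptComponent],
                   new_components: List[PromptComponent]) -> List[PromptComponent]:
    """Freeze all existing components, then append fresh trainable ones for the new task."""
    for comp in components:
        comp.frozen = True
    return components + new_components


if __name__ == "__main__":
    task1 = [PromptComponent(key=[1.0, 0.0], attention=[1.0, 1.0], prompt=[[1.0, 1.0]])]
    task2 = [PromptComponent(key=[0.0, 1.0], attention=[1.0, 1.0], prompt=[[2.0, 2.0]])]
    pool = start_new_task(task1, task2)
    composed, weights = compose_prompt([1.0, 0.0], pool)
    print("weights:", weights)
    print("composed prompt:", composed)
    print("frozen flags:", [c.frozen for c in pool])
```

Because old components are kept (and frozen) rather than overwritten, inputs resembling an earlier task still draw mostly on that task's components, which is the intuition behind reducing catastrophic forgetting.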


Advantages and Challenges

While prompt-tuning is more cost-effective and sustainable than fine-tuning, it has some limitations. A major drawback is the lack of interpretability of AI-designed prompts: these embeddings, strings of numbers optimized by the AI, are opaque, so researchers and developers cannot easily understand how or why they work. Even so, because it avoids fine-tuning all of the model’s parameters, prompt-tuning remains a powerful tool in natural language processing, and in most applications its advantages in speed, cost savings, and adaptability outweigh these concerns.
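One common way to peek inside a learned soft prompt is to map each prompt vector to its nearest vocabulary embeddings by cosine similarity. The sketch below assumes a hypothetical three-word vocabulary and hand-picked prompt vectors; `nearest_tokens` is an illustrative helper, not a library function. The resulting words are only a rough proxy: a learned vector can sit between many tokens without matching any of them well, which is exactly why soft prompts are hard to interpret.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity, defined as 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def nearest_tokens(prompt: List[Vector], vocab: Dict[str, Vector], k: int = 1) -> List[List[str]]:
    """For each soft-prompt vector, return the k vocabulary tokens with the closest embeddings."""
    result = []
    for vec in prompt:
        ranked = sorted(vocab, key=lambda tok: cosine(vec, vocab[tok]), reverse=True)
        result.append(ranked[:k])
    return result


if __name__ == "__main__":
    vocab = {"good": [1.0, 0.0], "bad": [-1.0, 0.0], "movie": [0.0, 1.0]}
    learned_prompt = [[0.9, 0.1], [-0.2, 0.8]]  # hypothetical trained prompt vectors
    print(nearest_tokens(learned_prompt, vocab, k=2))
```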

The Future of Prompt-Tuning

As foundation models become increasingly central to enterprise AI applications, from drug discovery to customer service, prompt-tuning is poised to play a crucial role in their deployment. Its ability to reduce costs, speed up customization, and enable sustainable AI practices makes it an essential tool for businesses and researchers alike. Ongoing research into multi-task and continual learning, along with efforts to make soft prompts more interpretable, promises to expand the capabilities of prompt-tuning even further.

In summary, prompt-tuning offers a fast, low-cost, and sustainable way to customize powerful AI models for specialized tasks. By optimizing a small set of prompt parameters instead of retraining the entire model, organizations can rapidly deploy tailored solutions, reduce environmental impact, and keep pace with the fast-moving AI landscape.
