LLM JAILBREAK DEFINITION

What is an AI Jailbreak?

LLM jailbreaking refers to techniques that circumvent the safety measures, ethical guidelines, and content restrictions built into large language models (LLMs), such as OpenAI’s GPT or Google’s Bard. Developers put these guidelines in place to prevent the model from generating harmful, illegal, offensive, or otherwise inappropriate content. By manipulating prompts and inputs, users can trick the model into producing outputs that it would normally refuse.

Jailbreaks exploit weaknesses in how an LLM is trained and how it responds to input. Some users jailbreak models for research and testing purposes; others do so to generate harmful, misleading, or prohibited content. The result is a continuous cat-and-mouse game between attack strategies and defensive measures.

How Does Jailbreaking Work in Large Language Models?

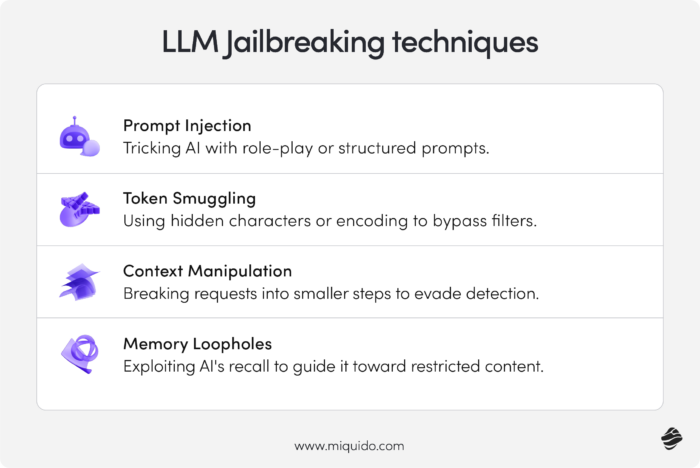

Jailbreaking an LLM typically involves crafting jailbreak prompts: manipulated inputs designed to exploit vulnerabilities in the model and override its built-in safety restrictions. Users achieve this through several methods:

1. Prompt Injection Jailbreaking Attacks

- A user feeds the model carefully structured, malicious prompts that cause it to ignore or override its restrictions and produce harmful outputs.

- Example: Asking the model to “role-play” or pretend to be an entity without restrictions, which can sometimes lead it to generate prohibited content.

2. Token Smuggling and Encoding Tricks

- Users insert hidden characters, spacing techniques, or encoded words to fool the AI’s filters.

- Example: Instead of directly asking for dangerous content, they may use spaced-out letters, phonetic spelling, or Unicode obfuscation.
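
One common defensive response to these encoding tricks is to normalize a prompt before any filter inspects it. The sketch below is illustrative only: the function name `normalize_prompt` and the specific character lists are assumptions, not part of any particular product, and a real filter would need to handle many more obfuscation styles.

```python
import re
import unicodedata

# Zero-width and invisible characters sometimes used to split words (illustrative list).
_INVISIBLE = re.compile("[\u200b\u200c\u200d\u2060\ufeff\u00ad]")
# Single letters separated by spaces, dots, hyphens, or underscores, e.g. "w o r d".
_SPACED = re.compile(r"\b(?:[A-Za-z][\s._-]){2,}[A-Za-z]\b")


def normalize_prompt(text: str) -> str:
    """Return a canonical form of `text` for downstream filtering (hypothetical helper)."""
    # NFKC folds compatibility characters such as fullwidth letters to their plain forms.
    text = unicodedata.normalize("NFKC", text)
    # Drop invisible characters that NFKC leaves in place.
    text = _INVISIBLE.sub("", text)
    # Rejoin spaced-out letters into a single word.
    text = _SPACED.sub(lambda m: re.sub(r"[\s._-]", "", m.group(0)), text)
    return text.casefold()
```

Normalization does not decide whether a prompt is harmful; it only makes later checks harder to evade with spacing or Unicode look-alikes. It can also merge legitimately spaced text such as initials, so the filter's verdict should be based on the normalized copy while the original prompt is kept unchanged.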

3. Context Manipulation and Chain Prompting

- A multi-step method where users break down a request into smaller, seemingly harmless components and then reassemble them into restricted content.

- Example: First asking the AI to describe a process in general terms and then requesting progressively more specific steps until it inadvertently provides restricted information. Each request looks harmless on its own, but the sequence guides the model toward inappropriate or dangerous content.

4. Leveraging AI’s Self-Consistency and Memory Loopholes

- Some models recall previous parts of a conversation, allowing users to gradually lead them into giving inappropriate responses.

- Example: Initially discussing ethical hacking techniques and then shifting the conversation towards unethical practices without explicitly asking for them upfront.

Why is LLM Jailbreaking a Concern?

LLM jailbreaking poses ethical, security, and societal risks. Attackers use various techniques to manipulate LLMs into producing harmful content, such as instructions for illegal activities and hate speech. The main concerns include:

1. Spread of Misinformation and Fake News

- Jailbroken models can be exploited to generate false or misleading information at scale, contributing to misinformation campaigns.

2. Generation of Harmful or Dangerous Content

- A jailbroken LLM might provide instructions for illegal activities, cybercrime, self-harm, or other dangerous behaviors, or produce hate speech. These failures stem in part from the model’s conflicting goals of helpfulness and safety.

3. Ethical and Legal Violations

- Producing biased, offensive, or discriminatory content could lead to reputational damage for organizations deploying LLMs and potential legal consequences.

4. AI-Powered Cybersecurity Threats

- Cybercriminals could exploit jailbroken LLMs for phishing, fraud, or social engineering attacks, making it easier to deceive individuals and organizations.

How Can LLM Jailbreaking Be Prevented with Safety Measures?

Developers, researchers, and users must take proactive steps to prevent jailbreaking and mitigate its risks. Here are some key approaches:

1. Improved AI Training and Fine-Tuning

- Developers continuously train models on adversarial prompts to identify and close loopholes that could lead to jailbreaking.

- Reinforcement learning from human feedback (RLHF) helps refine AI responses and teaches the model to refuse harmful queries.

2. Advanced Content Moderation Techniques

- AI companies deploy content filtering tools and real-time monitoring to detect and block LLM jailbreak attempts. Safety guardrails are also essential protective measures implemented to prevent harmful or inappropriate content generation.

- Implementation of heuristic-based and AI-driven moderation systems that analyze prompts for potential LLM jailbreak strategies.
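
A heuristic-based prompt screen of the kind described above could look roughly like the following. This is a minimal sketch under stated assumptions: the rule names, regular expressions, weights, and the 0.5 threshold are all invented for illustration, and production systems typically combine such rules with a trained classifier.

```python
import re
from dataclasses import dataclass

# Illustrative rules only: (name, pattern, weight). All values are assumptions.
RULES = [
    ("instruction_override",
     re.compile(r"\bignore (?:all |any )?(?:previous|prior|above) (?:instructions|rules)\b", re.I),
     0.6),
    ("unrestricted_persona",
     re.compile(r"\b(?:pretend|act|role-?play)\b.*\b(?:no|without) (?:restrictions|rules|filters)\b", re.I),
     0.5),
]


@dataclass
class Verdict:
    score: float    # combined rule weight, capped at 1.0
    matched: list   # names of the rules that fired
    blocked: bool   # True when score reaches the threshold


def screen_prompt(prompt: str, threshold: float = 0.5) -> Verdict:
    """Score a prompt against the heuristic rules (hypothetical helper)."""
    matched, score = [], 0.0
    for name, pattern, weight in RULES:
        if pattern.search(prompt):
            matched.append(name)
            score += weight
    score = min(1.0, score)
    return Verdict(score=score, matched=matched, blocked=score >= threshold)
```

Returning the matched rule names alongside the score makes blocked prompts easier to audit and helps tune weights when a rule produces false positives.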

3. Regular Security Audits and Red-Teaming

- Conducting rigorous stress tests using simulated attacks to identify vulnerabilities before they can be exploited. The attack success rate (ASR) is a key metric here: it is the number of successful simulated attacks divided by the total number of attempts, so a lower ASR after a fix indicates a stronger defense.

- Encouraging ethical hackers and security researchers to report vulnerabilities through bug bounty programs.
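
The attack success rate mentioned above is a simple ratio of successful attacks to total attempts. The sketch below shows one way to compute it overall and per technique from red-team results; the function names and the `(technique, succeeded)` record format are assumptions made for this example.

```python
from collections import defaultdict


def attack_success_rate(outcomes):
    """Fraction of attempts that succeeded; `outcomes` is an iterable of booleans."""
    outcomes = list(outcomes)
    if not outcomes:
        raise ValueError("ASR is undefined with zero attempts")
    return sum(outcomes) / len(outcomes)


def asr_by_technique(results):
    """Group (technique, succeeded) pairs and compute the ASR for each technique."""
    grouped = defaultdict(list)
    for technique, succeeded in results:
        grouped[technique].append(bool(succeeded))
    return {name: attack_success_rate(o) for name, o in grouped.items()}
```

Breaking the rate down by technique shows where a defense is weakest, which a single overall number can hide.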

4. User Awareness and Ethical AI Usage

- Educating users about the risks of AI misuse and the ethical implications of LLM jailbreaking.

- Promoting responsible AI usage through policy enforcement and legal frameworks that penalize malicious misuse.

5. Real-Time AI Supervision

- Implementing dynamic AI monitoring that detects unusual behavior and modifies responses accordingly.

- Creating fallback mechanisms that restrict further interactions if an LLM jailbreak attempt is detected.
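
A fallback mechanism of this kind can be sketched as a small per-session counter that refuses flagged turns and locks the session after repeated attempts. The class name `SessionGuard`, the action strings, and the default limit of three flags are hypothetical choices for illustration; how a turn gets flagged is left to whatever detector the deployment uses.

```python
from dataclasses import dataclass


@dataclass
class SessionGuard:
    max_flags: int = 3   # assumed limit before the session is locked
    flags: int = 0       # flagged turns seen so far
    locked: bool = False

    def record_turn(self, flagged: bool) -> str:
        """Return the action for this turn: 'allow', 'refuse', or 'locked'."""
        if self.locked:
            return "locked"
        if not flagged:
            return "allow"
        self.flags += 1
        if self.flags >= self.max_flags:
            self.locked = True
            return "locked"
        return "refuse"
```

Because the counter persists across turns, it also covers gradual multi-turn attempts where no single message would justify ending the conversation.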

Conclusion: Addressing the Challenge of LLM Jailbreaking

LLM jailbreaking is a growing concern in the AI landscape, posing risks to ethical AI deployment, cybersecurity, and misinformation control. While jailbreaking techniques evolve, so too must countermeasures designed to safeguard LLMs from exploitation. A successful jailbreak can bypass these safety measures, leading to harmful outputs and necessitating continuous advancements in defense strategies.

Developers must remain vigilant, continuously refining models to strengthen their defenses. At the same time, users and organizations must promote ethical AI use to ensure these powerful technologies serve humanity responsibly. By maintaining a balance between accessibility and security, we can foster an AI-driven future that upholds trust, fairness, and safety.
