What is an AI Token: Definition

What is an AI Token?

In AI, a token is a basic unit of data that a model processes, most commonly in natural language processing and machine learning. A token is one piece of a larger input and may represent a word, part of a word, a character, or a punctuation mark. For example, a simple tokeniser splits a sentence so that each word and each punctuation mark becomes a separate token, while many modern language models also split rarer words into smaller subword pieces. This process, called tokenisation, is a crucial step in preparing data for an AI model.
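The word-and-punctuation splitting described above can be sketched in a few lines of Python. This is only an illustration: the function name `simple_tokenize` and the regular expression are assumptions made for this example, not the tokeniser of any particular model.

```python
import re

def simple_tokenize(text: str) -> list[str]:
    """Split text into word tokens and single punctuation-mark tokens."""
    # \w+ matches a run of letters, digits or underscores (a "word");
    # [^\w\s] matches one character that is neither a word character
    # nor whitespace, i.e. a single punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = simple_tokenize("Tokens are small, useful units.")
print(tokens)
# ['Tokens', 'are', 'small', ',', 'useful', 'units', '.']
```

Whitespace is discarded here, which is one of the simplifications that production tokenisers usually avoid.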

AI tokens are not restricted to text. They can represent other forms of data as well. In computer vision, a token may denote an image segment, such as a group of pixels or a single pixel. In audio processing, a token might be a short snippet of sound. This flexibility is what lets AI systems interpret and learn from different kinds of data. Understanding AI token limits also matters for keeping AI deployments cost-effective and efficient.

AI Tokens Explained

Tokens play an essential role in AI systems, particularly in machine learning models that handle language tasks. In such models, AI tokens are the inputs that algorithms analyse to learn patterns. For instance, in a chatbot, the user’s input is split into tokens (in the simplest case, one per word), which the model uses to understand the message and respond appropriately. Counting the tokens in a text is also useful for estimating processing time and cost, keeping in mind that different tokenization methods produce different counts for the same text.
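To see how the tokenization method changes the count, the sketch below counts the same text in three simple ways. The function name `count_tokens` and the three schemes are illustrative assumptions; subword tokenizers used by real language models would give yet another number.

```python
import re

def count_tokens(text: str) -> dict[str, int]:
    """Count tokens in `text` under three simple tokenization schemes."""
    return {
        # Split on whitespace only: punctuation stays attached to words.
        "whitespace": len(text.split()),
        # Words and punctuation marks are separate tokens.
        "word_and_punct": len(re.findall(r"\w+|[^\w\s]", text)),
        # Every character, including spaces, is a token.
        "character": len(text),
    }

counts = count_tokens("Hello, how are you?")
print(counts)
# {'whitespace': 4, 'word_and_punct': 6, 'character': 19}
```

The same 19-character message is 4, 6, or 19 tokens depending on the scheme, which is why token-based estimates should always use the tokenizer of the model actually being called.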

In advanced AI models like transformers, AI tokens are even more crucial. These models process all the tokens in a sequence together, relating each token to the others, which lets the AI capture context and nuance in language. This understanding is critical for tasks like translation, sentiment analysis, and content generation.

How Tokens Work in Generative AI and Natural Language Processing

Tokens are fundamental to how generative AI models interpret input, predict output, and maintain context within a fixed context window. The typical process involves:

- Tokenization: A tokenizer divides the input text into AI tokens, which can be whole words, subwords, or single characters, depending on the tokenization strategy. This is a core step in natural language processing (NLP).

- Embedding: Each token is converted into a numerical vector that the model can process.

- Processing & Prediction: Using a transformer-based architecture, the model computes a probability distribution over possible next tokens and generates content one token at a time.

- Decoding & Output: At each step the model selects a next token (the most likely one, or one sampled from the distribution), and the resulting token sequence is converted back into human-readable text or other forms of content.

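For example, given the input “Tell me a joke about AI.”, a GPT model might tokenize it into something like [“Tell”, “me”, “a”, “joke”, “about”, “AI”, “.”]. In practice, GPT tokenizers usually attach the preceding space to a token and may split rarer words into several pieces, so the exact tokens can differ. The model then processes these AI tokens to generate a coherent response.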
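The four steps above (tokenization, embedding, prediction, decoding) can be sketched end to end with toy numbers. Everything here is hypothetical: the six-entry vocabulary, the hand-written 2-dimensional embeddings, and the "model", which simply averages the input embeddings and scores each vocabulary entry by a dot product. A real transformer is far more elaborate; the sketch only shows how the pieces connect.

```python
import math

# Hypothetical toy vocabulary; real models use tens of thousands of entries.
VOCAB = ["<unk>", "tell", "me", "a", "joke", "."]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

# Hypothetical hand-written embeddings, one 2-D vector per vocabulary entry.
EMBEDDINGS = [
    [0.0, 0.0],  # <unk>
    [1.0, 0.0],  # tell
    [0.0, 1.0],  # me
    [1.0, 1.0],  # a
    [2.0, 1.0],  # joke
    [0.5, 2.0],  # .
]

def tokenize(text: str) -> list[str]:
    """Step 1: lower-case, split off full stops, split on whitespace."""
    return text.lower().replace(".", " .").split()

def encode(tokens: list[str]) -> list[int]:
    """Map tokens to vocabulary ids; unknown tokens map to id 0 (<unk>)."""
    return [TOKEN_TO_ID.get(tok, 0) for tok in tokens]

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_distribution(ids: list[int]) -> list[float]:
    """Steps 2-3: embed the ids, then score every vocabulary entry.

    Stand-in for a transformer: the context is the mean embedding of a
    non-empty input, and each score is a dot product with that context.
    """
    dim = len(EMBEDDINGS[0])
    context = [sum(EMBEDDINGS[i][d] for i in ids) / len(ids) for d in range(dim)]
    scores = [sum(c * e for c, e in zip(context, emb)) for emb in EMBEDDINGS]
    return softmax(scores)

def greedy_decode(probs: list[float]) -> str:
    """Step 4: pick the most probable token and map it back to text."""
    best = max(range(len(probs)), key=probs.__getitem__)
    return VOCAB[best]

ids = encode(tokenize("Tell me a"))
probs = next_token_distribution(ids)
print(ids, greedy_decode(probs))
# [1, 2, 3] joke
```

Greedy selection is only one decoding choice; sampling from `probs` instead would give more varied output at the cost of determinism.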

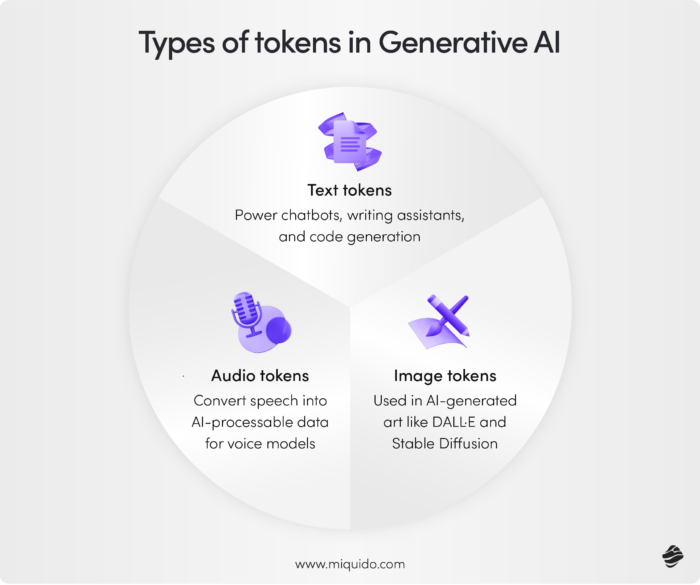

Types of Tokens in Generative AI

- Text Tokens: Used in LLMs to generate human-like responses in applications like chatbots, writing assistants, and code generation tools.

- Image Tokens: Applied in models like DALL·E and Stable Diffusion, where images are broken down into token-like structures for AI-driven art generation.

- Audio Tokens: Used in AI voice models, where speech is converted into tokenized representations for processing and generation. Across all of these types, models that can handle more tokens can take in longer inputs, such as longer documents or recordings, in a single pass.
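As a rough illustration of how non-text data can be cut into token-like units, the sketch below splits a small grid of pixel values into square patches and a list of audio samples into fixed-length frames. The function names and sizes are assumptions for this example only. Real image and audio models typically go further and map each patch or frame to a learned code or vector, which is not shown here.

```python
def image_to_patches(image: list[list[int]], patch: int) -> list[list[int]]:
    """Split a 2-D grid of pixel values into non-overlapping patch x patch
    blocks, each flattened into one list (one "image token" per block)."""
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            block = [row[left:left + patch] for row in image[top:top + patch]]
            patches.append([pixel for row in block for pixel in row])
    return patches

def audio_to_frames(samples: list[int], frame_len: int) -> list[list[int]]:
    """Split a list of audio samples into consecutive frames of frame_len
    samples (the last frame may be shorter)."""
    return [samples[i:i + frame_len] for i in range(0, len(samples), frame_len)]

# A 4x4 "image" whose pixel values are simply 0..15, row by row.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, 2)
print(len(patches), patches[0])
# 4 [0, 1, 4, 5]

frames = audio_to_frames(list(range(10)), 4)
print(frames)
# [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
```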

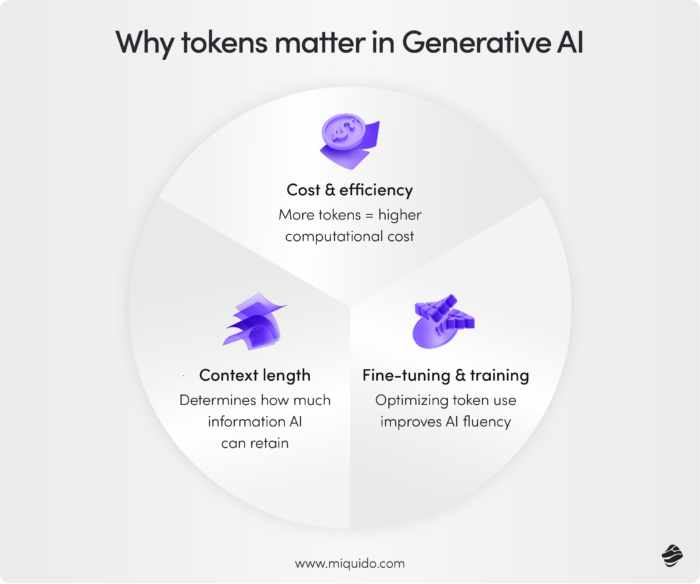

Why Tokens Matter in Generative AI

- Cost & Efficiency: AI providers often charge by AI token usage, so more tokens mean more computation and higher cost. Token limits therefore directly affect operational expenses and efficiency.

- Context Length: The number of AI tokens a model can process at once (its context window) affects its ability to maintain context and coherence in responses.

- Fine-Tuning & Training: Developers optimize models by adjusting how AI tokens are processed, which can improve fluency and relevance. Tokens are the building blocks of natural language processing and generative AI models such as ChatGPT.
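The cost and context-length points can be made concrete with a little arithmetic. The sketch below is a minimal illustration under stated assumptions: the prices, the context window size, and both function names are hypothetical and do not correspond to any provider's actual pricing or limits.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Estimate the cost of one request when input and output tokens are
    billed at separate (hypothetical) prices per 1,000 tokens."""
    return (input_tokens / 1000 * price_in_per_1k
            + output_tokens / 1000 * price_out_per_1k)

def fit_to_context(token_ids: list[int], context_window: int,
                   reserved_for_output: int) -> list[int]:
    """Keep only the most recent tokens that fit in the context window,
    leaving room for the tokens the model is expected to generate."""
    budget = context_window - reserved_for_output
    if budget <= 0:
        raise ValueError("reserved_for_output must be smaller than context_window")
    return token_ids[-budget:]

# 1,500 input tokens and 500 output tokens at made-up prices.
print(estimate_cost(1500, 500, price_in_per_1k=0.5, price_out_per_1k=1.5))
# 1.5

# A 10-token history squeezed into an 8-token window with 3 tokens reserved.
print(fit_to_context(list(range(10)), context_window=8, reserved_for_output=3))
# [5, 6, 7, 8, 9]
```

Dropping the oldest tokens is the simplest truncation policy; summarising or selecting earlier content are common alternatives when the early context matters.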

Advancements in AI Tokenization and Token Limits

Recent research has explored alternative tokenization methods to improve the performance of language models. For instance, the paper “Learn Your Tokens: Word-Pooled Tokenization for Language Modeling” proposes a strategy that uses word boundaries to pool characters into word representations, which are then processed by the model. The authors report improvements on language modeling tasks, particularly for rare words.

Another study, “Tokenization Is More Than Compression,” examines various design decisions in tokenization, highlighting the importance of pre-tokenization and the benefits of using Byte-Pair Encoding (BPE) to initialize vocabulary construction. The findings suggest that effective tokenization goes beyond mere data compression and plays a crucial role in model performance.
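For readers unfamiliar with Byte-Pair Encoding, its core idea is to build a vocabulary by repeatedly merging the most frequent pair of adjacent symbols. The sketch below is a minimal, character-level version of that training loop and is not the procedure used in the study above. The function names, the tiny word-frequency corpus, and the alphabetical tie-breaking rule are all assumptions made for this example.

```python
from collections import Counter

def most_frequent_pair(words: dict[tuple[str, ...], int]):
    """Return the most frequent adjacent symbol pair, or None if there is none.
    Ties are broken alphabetically (an assumption, to keep results stable)."""
    pairs = Counter()
    for symbols, freq in words.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    if not pairs:
        return None
    return min(pairs, key=lambda pair: (-pairs[pair], pair))

def merge_pair(words: dict[tuple[str, ...], int], pair: tuple[str, str]):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(corpus_counts: dict[str, int], num_merges: int):
    """Learn up to `num_merges` merges from a word -> frequency mapping."""
    # Start with every word split into single characters.
    words = {tuple(word): freq for word, freq in corpus_counts.items()}
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words

merges, words = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, 3)
print(merges)
# [('e', 's'), ('es', 't'), ('l', 'o')]
```

After three merges the frequent suffix "est" has become a single symbol, which hints at why subword vocabularies handle rare or unseen words better than whole-word vocabularies.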

What are AI tokens: a summary

As AI continues to evolve, efficient AI tokenization methods become increasingly important for processing larger datasets accurately, which in turn makes generative AI models more capable and versatile.

In summary, AI tokens are basic yet powerful units of data in AI development. They are the foundational elements that allow algorithms to process and learn from various data types, such as text, images, and sounds. The concept of the token underpins a wide range of AI applications, from simple text processing to complex tasks that involve understanding context and subtleties in human language.
