AI Auditing Frameworks

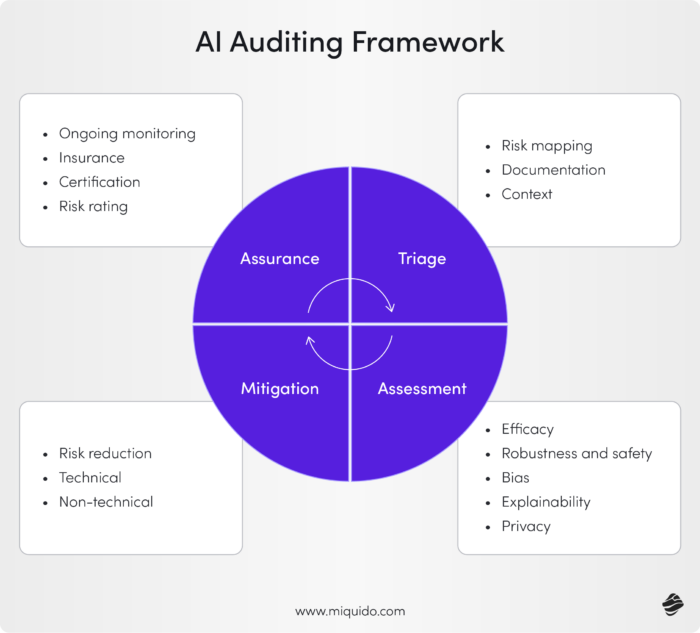

AI Auditing Framework

An AI auditing framework provides a structured approach to conducting AI audits: evaluating, monitoring, and validating the ethical, technical, and legal aspects of AI systems. It helps organizations ensure that their AI solutions are fair, accountable, transparent, and compliant with regulations. As AI is increasingly relied on in high-stakes settings such as healthcare, finance, and public services, auditing frameworks have become essential for addressing risks like bias, data misuse, and lack of transparency.

Why Is an AI Auditing Framework Important?

AI systems are powerful, but they are not without risks. Without oversight throughout the entire AI life cycle, AI can reinforce biases, make opaque decisions, or violate data privacy laws. The EU AI Act, the GDPR, and the OECD AI Principles are a few examples of regulations and guidelines that demand accountability for AI systems.

Key reasons to use an AI auditing framework include:

- AI Bias Mitigation: AI models can inadvertently perpetuate societal or historical biases present in their data. Auditing frameworks help identify and reduce these biases.

- Regulatory Compliance: Complying with laws like the GDPR (for data privacy) and emerging AI-specific laws reduces your organization’s legal risk.

- Trust and Transparency: AI audits help stakeholders (customers, employees, regulators) trust AI systems by verifying that they are explainable and reliable.

- Risk Management: By identifying risks early, businesses can prevent financial, reputational, or legal harm caused by poorly designed or implemented AI systems.

- Alignment with Ethical Principles: Ensures AI is used responsibly, adhering to ethical values like fairness, accountability, and inclusiveness.

Core Components of an AI Auditing Framework

An AI auditing framework evaluates AI systems across several dimensions to ensure they meet technical, legal, and ethical standards. Below are the key components:

1. Training Data Auditing

- Data Quality: Assess whether the data used to train AI models is accurate, complete, and up to date.

- Bias in Data: Identify potential biases in the dataset, such as underrepresentation of certain groups or skewed historical data.

- Privacy and Security: Ensure that the data complies with data protection laws (e.g., GDPR, CCPA) and is securely stored and processed.
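A minimal sketch of the first two checks in Python, assuming the training data is a list of dictionaries and that a field such as `gender` identifies the group to inspect. The function names, the 10% `min_share` cut-off, and the sample records are hypothetical choices for illustration, not a standard.

```python
from collections import Counter


def representation_report(records, group_key, min_share=0.1):
    """Count each group's records and flag groups whose share is below min_share."""
    # Records without the key are counted under the group None.
    counts = Counter(record.get(group_key) for record in records)
    total = sum(counts.values())
    return {
        group: {
            "count": n,
            "share": n / total,
            "underrepresented": n / total < min_share,
        }
        for group, n in counts.items()
    }


def missing_value_rates(records, fields):
    """Fraction of records in which each field is absent or None."""
    total = len(records)
    return {
        name: sum(1 for record in records if record.get(name) is None) / total
        for name in fields
    }


# Hypothetical sample: 19 records for one group, 1 for another.
records = [{"gender": "M", "age": 40, "income": 50_000} for _ in range(19)]
records.append({"gender": "F", "age": None, "income": 48_000})

print(representation_report(records, "gender"))
print(missing_value_rates(records, ["age", "income"]))
```

A flagged group is a prompt for review, such as collecting more data or reweighting, rather than an automatic verdict that the dataset is unusable.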

2. Algorithm Transparency and Explainability

- Model Transparency: Ensure that the AI model’s decision-making process is understandable to stakeholders, for example by using interpretability tools such as SHAP or LIME.

- Explainability: For high-stakes applications (e.g., finance, healthcare), the AI model must provide clear explanations of how it arrived at a decision.

- Black Box Risks: Evaluate the risks associated with “black box” models, which make decisions that are difficult to interpret.
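SHAP and LIME are third-party libraries with their own APIs. As a dependency-free illustration of the same goal (measuring how strongly each input drives a model's decisions), the sketch below uses a different, simpler model-agnostic technique: permutation importance. It assumes `model` is any callable that maps a feature row to a label; the toy model and data are hypothetical.

```python
import random


def accuracy(model, X, y):
    """Share of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average drop in accuracy when one feature column is shuffled.

    A large drop means the model relies heavily on that feature; a drop
    near zero means the feature barely affects its decisions."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy rows so the original data is left untouched.
            X_perm = [list(row) for row in X]
            for row, value in zip(X_perm, column):
                row[j] = value
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical toy model that only looks at the first feature.
def toy_model(row):
    return int(row[0] > 0.5)


X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y))
```

Because the toy model ignores the second feature, shuffling it leaves accuracy unchanged, so its importance is zero. Unlike SHAP or LIME, this gives one global score per feature rather than an explanation of an individual decision.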

3. Performance and Accuracy Auditing

- Model Accuracy: Test the AI system’s accuracy using benchmark datasets and real-world scenarios.

- Stress Testing: Evaluate the model’s performance under various conditions, including edge cases, rare scenarios, and adversarial inputs.

- Ongoing Monitoring: Establish processes for continuously monitoring and evaluating AI performance after deployment, since accuracy and reliability can degrade as real-world data changes.
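One common way to implement ongoing monitoring is to compare the distribution of a feature or model score in production with the distribution seen at training time. The sketch below computes the population stability index (PSI); the equal-width binning and the `eps` floor for empty bins are simplifying assumptions, and the thresholds mentioned afterwards are a widely used rule of thumb, not a regulatory requirement.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI = sum((a_i - e_i) * ln(a_i / e_i)) over bins.

    `expected` is the baseline sample (e.g. training data), `actual` the
    production sample. Equal-width bins span the baseline's range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant baseline

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clipped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]
shifted = [0.9 + i / 1000 for i in range(100)]
print(population_stability_index(baseline, baseline))  # identical samples
print(population_stability_index(baseline, shifted))   # strongly shifted sample
```

A frequently cited convention treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift worth investigating; teams should calibrate their own alert levels.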

4. Bias and Fairness Assessment

- Fair Outcomes: Ensure that the AI system does not produce unfair outcomes or discriminate against specific groups. Algorithmic bias can significantly undermine the fairness and equity of an AI system’s outcomes.

- Equality Audits: Test for disparate impact by comparing how the AI system performs for different demographic groups.

- Ethical Alignment: Verify that the AI system aligns with ethical principles such as fairness, non-discrimination, and inclusion.
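An equality audit for disparate impact often starts by comparing selection rates (the share of favorable outcomes) across groups. The sketch below assumes binary predictions (1 = favorable outcome) with one group label per prediction. The 0.8 threshold follows the "four-fifths rule" of thumb from US employment selection guidelines and is an assumption to replace with whatever standard applies to your system.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of favorable outcomes (prediction == 1) for each group."""
    totals, favorable = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += pred
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact(predictions, groups, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate."""
    rates = selection_rates(predictions, groups)
    reference = max(rates.values())
    report = {}
    for group, rate in rates.items():
        # If no group receives any favorable outcome, there is no disparity to measure.
        ratio = rate / reference if reference > 0 else 1.0
        report[group] = {"rate": rate, "ratio": ratio, "flagged": ratio < threshold}
    return report


# Hypothetical outcomes: group A is selected 3/5 times, group B 1/5 times.
preds = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0]
groups = ["A"] * 5 + ["B"] * 5
print(disparate_impact(preds, groups))
```

Here group B receives favorable outcomes at one third of group A's rate, so it is flagged. A low ratio signals the need for further investigation; on its own it does not prove discrimination.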

5. Governance and Accountability

- Roles and Responsibilities: Define who is accountable for the AI system, including model developers, business leaders, and external stakeholders.

- AI Governance Policies: Establish clear policies and internal controls for the development, deployment, and monitoring of AI systems.

- Compliance Documentation: Keep records of model development, testing, and deployment to demonstrate compliance with laws and regulations.
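Compliance records are easier to defend when they cannot be silently altered. The sketch below is a hypothetical, minimal append-only log in which each entry stores the SHA-256 hash of the previous entry, so editing an earlier record breaks the chain. The `AuditLog` class and the event names are illustrative assumptions. Note that a hash chain is tamper-evident rather than tamper-proof: anyone able to rewrite every entry can also recompute every hash.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Append-only log where each entry records the hash of the one before it."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(body):
        # sort_keys makes the serialization, and therefore the hash, deterministic.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, event, details):
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return False if any entry was edited or the chain was reordered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("training_completed", {"model": "credit-v1", "dataset": "loans-2023"})
log.append("bias_test", {"disparate_impact_ratio": 0.91})
print(log.verify())  # True
log.entries[0]["details"]["dataset"] = "edited"
print(log.verify())  # False
```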

6. Security and Resilience in AI Systems

- Robustness: Ensure the AI system can handle adversarial attacks, such as maliciously manipulated inputs designed to confuse the model.

- Cybersecurity: Evaluate the system for vulnerabilities and security risks that could be exploited by hackers.

- System Failures: Plan for contingencies and fallback mechanisms in case the AI system fails.
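A fallback mechanism can be as simple as a wrapper that routes a request to a safe default, such as manual review, when the model raises an error or reports low confidence. This sketch assumes a hypothetical model interface that returns a `(label, confidence)` pair; the 0.7 confidence cut-off is an arbitrary placeholder.

```python
import logging

logger = logging.getLogger("ai_audit")


def predict_with_fallback(model, features, fallback, min_confidence=0.7):
    """Return (decision, source), using `fallback` on errors or low confidence.

    `model` is assumed to return a (label, confidence) pair."""
    try:
        label, confidence = model(features)
    except Exception:
        # Log the failure so it appears in the audit trail, then degrade safely.
        logger.exception("Model call failed; using fallback")
        return fallback(features), "fallback:error"
    if confidence < min_confidence:
        return fallback(features), "fallback:low_confidence"
    return label, "model"


# Hypothetical stand-ins for a real model and a manual-review queue.
def confident_model(features):
    return "approve", 0.95


def manual_review(features):
    return "manual_review"


print(predict_with_fallback(confident_model, {"income": 52_000}, manual_review))
```

Returning the source of each decision alongside the decision itself makes it easy to audit how often the system falls back, which is itself a useful resilience metric.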

7. Ethical and Social Impact Assessment

- Ethical AI Guidelines: Audit the system against ethical guidelines, such as Microsoft’s Responsible AI principles (which include fairness, accountability, and transparency). Human judgment is crucial for ensuring that AI systems align with these guidelines.

- Impact on Stakeholders: Assess how the AI system impacts various stakeholders (e.g., customers, employees, society at large).

- Long-Term Implications: Evaluate potential unintended consequences, including environmental, social, or economic impacts.

8. Compliance with AI Regulations

- Legal Standards: Check compliance with applicable AI laws, such as the EU’s AI Act, GDPR, or sector-specific regulations (e.g., healthcare or finance). Employing multiple frameworks can enhance compliance and provide a more comprehensive evaluation of AI systems.

- Auditable Records: Maintain thorough documentation of the AI lifecycle, including data sources, training processes, and deployment practices.

- Third-Party Reviews: Engage independent auditors or certification bodies to validate compliance.

Steps to Implement an AI Auditing Framework

- Identify AI Use Cases: Start by determining which AI systems in your organization need auditing. Prioritize high-stakes systems, such as those in finance, healthcare, or HR.

- Assemble an Audit Team: Create a multidisciplinary team that includes data scientists, legal experts, ethicists, and business leaders to conduct the audit. An internal audit can provide valuable insights and ensure that the auditing process is thorough and effective.

- Establish Baselines: Define what success looks like. For instance, set benchmarks for accuracy, fairness, and compliance metrics.

- Evaluate Pre-Deployment: Before deployment, assess the AI model’s data, algorithms, and governance structure to identify potential risks.

- Deploy Continuous Monitoring: Implement tools to track model performance, bias, and compliance in real time after deployment.

- Engage Third-Party Auditors: Use external experts to conduct unbiased reviews of your AI systems, especially for high-stakes applications.

- Iterate and Improve: Use audit findings to refine your AI systems. Regularly update models, policies, and governance practices to adapt to new risks and regulations.
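The "Establish Baselines" and "Evaluate Pre-Deployment" steps can be turned into an automated gate that compares measured metrics against agreed limits before a model ships. The metric names and limits in `BASELINES` below are hypothetical examples; each organization would set its own.

```python
# Hypothetical baselines: (direction, limit) for each metric.
BASELINES = {
    "accuracy": ("min", 0.90),
    "disparate_impact_ratio": ("min", 0.80),
    "psi": ("max", 0.25),
}


def check_against_baselines(metrics, baselines=BASELINES):
    """Return a list of human-readable failures; an empty list means the gate passes."""
    failures = []
    for name, (direction, limit) in baselines.items():
        value = metrics.get(name)
        if value is None:
            # A metric that was never measured counts as a failure, not a pass.
            failures.append(f"{name}: missing")
        elif direction == "min" and value < limit:
            failures.append(f"{name}: {value} < {limit}")
        elif direction == "max" and value > limit:
            failures.append(f"{name}: {value} > {limit}")
    return failures


print(check_against_baselines({"accuracy": 0.93, "disparate_impact_ratio": 0.85, "psi": 0.05}))
print(check_against_baselines({"accuracy": 0.87, "psi": 0.30}))
```

The same check can be rerun on a schedule after deployment, so that pre-deployment evaluation and continuous monitoring share one definition of success.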

Best Practices for AI Auditing

- Start with Transparency: Ensure that AI systems are built with transparency in mind, from data collection to model outputs.

- Incorporate Stakeholders: Include input from affected stakeholders—customers, employees, and regulators—during the auditing process. Internal auditors play a crucial role in ensuring that the auditing process is comprehensive and effective.

- Automate Monitoring: Use AI governance tools such as IBM Watson OpenScale or Microsoft’s Responsible AI Dashboard for ongoing assessment and monitoring, and documentation frameworks such as Google’s Model Cards to record how each model was built and evaluated.

- Align with Ethical Standards: Adopt frameworks such as the OECD AI Principles, Microsoft’s Responsible AI Standard, or Google’s AI Principles to guide your audits.

- Regularly Update Policies: AI systems evolve, and so do risks. Regularly update your auditing framework to address emerging challenges, such as explainability for generative AI or edge AI risks.

AI Auditing Framework Use Cases

AI auditing frameworks are essential for many applications of artificial intelligence, including hiring, financial services, healthcare, retail, and law enforcement.

- Hiring and HR: Audit AI-driven hiring tools to ensure they don’t discriminate based on gender, race, or age.

- Financial Services: Evaluate credit-scoring models to ensure fair lending practices and compliance with financial regulations.

- Healthcare: Audit AI tools used for diagnostics to ensure accuracy and reduce biases against underrepresented groups.

- Retail: Check personalization algorithms to ensure fair and inclusive recommendations for all customers.

- Law Enforcement: Audit facial recognition systems to ensure accuracy across different demographic groups and avoid wrongful identifications.
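For facial recognition, the relevant comparison is usually between error rates, because a false positive (a wrong match) can lead to a wrongful identification. A minimal sketch, assuming binary match/no-match decisions, ground-truth labels, and a demographic group for each test case; the toy data is hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Per-group false positive rate (wrong match) and false negative rate (missed match)."""
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c["pos"] += 1
            c["fn"] += pred == 0
        else:
            c["neg"] += 1
            c["fp"] += pred == 1
    # A rate is None when the group has no cases of that kind to evaluate.
    return {
        group: {
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for group, c in counts.items()
    }


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 1]
groups = ["A"] * 4 + ["B"] * 4
print(error_rates_by_group(y_true, y_pred, groups))
```

In this toy data, group B has a false positive rate of 1.0 against 0.0 for group A, the kind of gap an audit would flag. Real evaluations need far larger, representative test sets per group before such rates are meaningful.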

Benefits of an AI Auditing Framework

- Builds Trust: Increases trust among customers, employees, and regulators.

- Ensures Fairness: Reduces biases and promotes fair outcomes.

- Enhances Compliance: Keeps AI systems aligned with current and emerging laws.

- Mitigates Risks: Identifies vulnerabilities and helps manage risks before they cause harm.

- Improves Transparency: Makes AI systems more explainable and easier to understand.

Building Trustworthy AI with Audits

An AI auditing framework is essential for organizations that want to harness AI responsibly, ethically, and legally. By focusing on transparency, fairness, governance, and compliance, organizations can create AI systems that not only deliver business value but also gain the trust of their stakeholders.

As AI becomes increasingly complex, regular audits, external reviews, and continuous monitoring will be key to ensuring that AI systems remain robust, secure, and aligned with ethical principles. Building AI responsibly isn’t just good practice; it’s the foundation for sustainable innovation in an AI-driven future.
